How Convolutional Neural Networks Use Shift Equivariance to Recognize Patterns

If you’ve ever wondered why convolutional neural networks (CNNs) are so powerful, especially for image recognition, much of it comes down to two mathematical properties: locality (each filter looks at a small neighborhood of the input) and shift equivariance. Let’s unpack what that means in everyday terms.

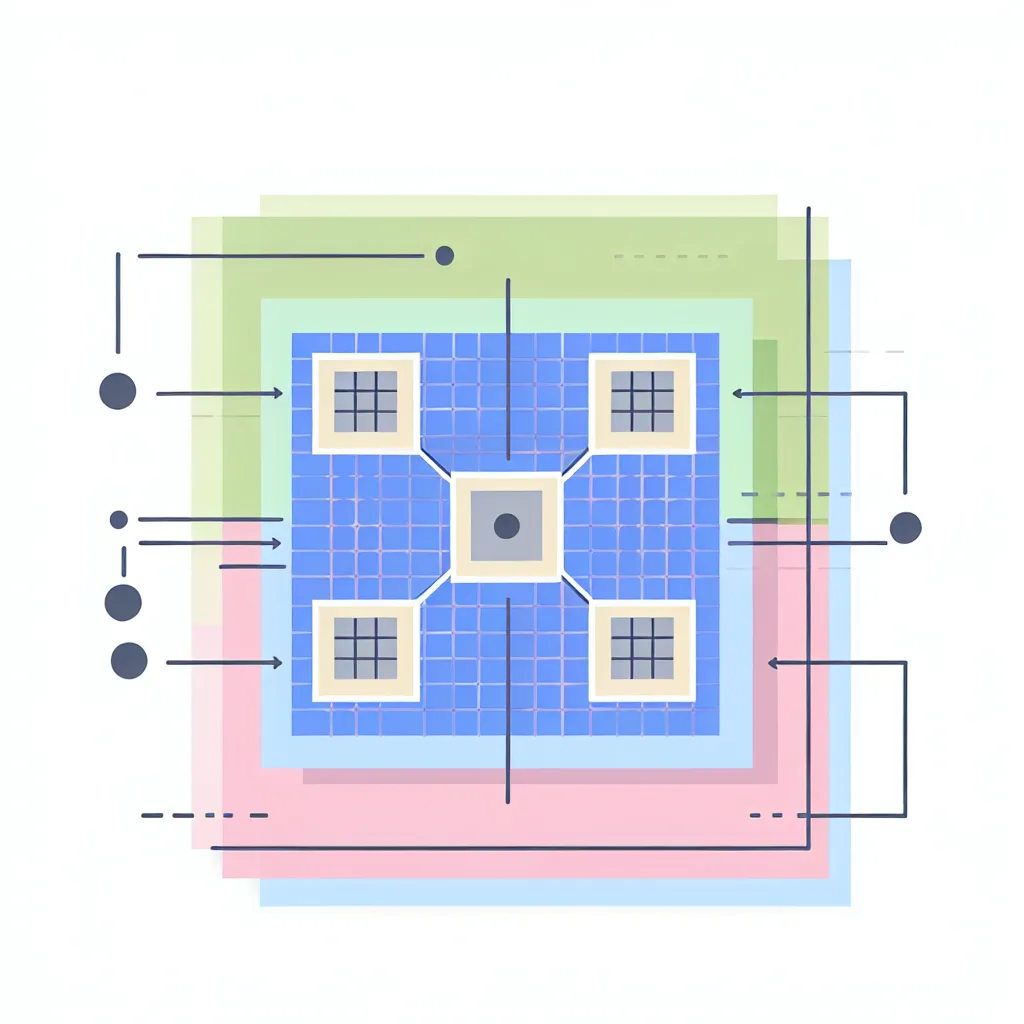

At the heart of CNNs is the idea that the linear part of each layer is a shift-equivariant linear operator. What’s that? Imagine you have an image. If you shift (or translate) the image and then apply the operation, you get the same result as if you first applied the operation and then shifted the output by the same amount. This property is called shift equivariance. (In real networks, image borders, strides, and pooling make this hold approximately rather than exactly.)
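
To make that concrete, here is a minimal NumPy sketch of the "shift then filter equals filter then shift" idea. It assumes a 1-D signal and circular (wrap-around) shifts so the property holds exactly; the helper name `circular_conv` and the 3-tap filter are made up for illustration.

```python
import numpy as np

def circular_conv(signal, kernel):
    """Circular (wrap-around) convolution of two 1-D arrays of equal length."""
    n = len(signal)
    out = np.zeros(n)
    for i in range(n):
        for j in range(n):
            out[i] += kernel[j] * signal[(i - j) % n]
    return out

rng = np.random.default_rng(0)
signal = rng.normal(size=8)

# Hypothetical 3-tap filter, zero-padded to the signal length.
kernel = np.zeros(8)
kernel[:3] = [1.0, -2.0, 1.0]

shift = 3
shift_then_filter = circular_conv(np.roll(signal, shift), kernel)
filter_then_shift = np.roll(circular_conv(signal, kernel), shift)

# Equivariance: both orders of operations agree.
equivariant = np.allclose(shift_then_filter, filter_then_shift)
print(equivariant)
```

Note that the filtered output of the shifted signal is not the same as the filtered output of the original signal; it is the same output, moved by three positions.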

Why does that matter? Well, it means a filter that detects a pattern in one place detects it everywhere: move the pattern, and the detection moves with it. This is why CNNs excel at recognizing objects whether they’re in the top left corner or right in the center.

Technically, each layer of a CNN applies a linear operation (think of it like a filter) followed by a nonlinearity (a simple pointwise function, such as ReLU, that helps the network learn complex patterns). The linear operator $T$ has a neat feature: it satisfies the equation $T(\tau_x f) = \tau_x (T f)$, where $f$ is the input and $\tau_x$ shifts it by $x$. This means the operator “commutes” with shifts. In simpler terms, the operation responds to a pattern the same way wherever it is located, and the response moves along with the pattern. (That is equivariance. Invariance, where the output does not change at all, is a different property.)
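
A pointwise nonlinearity does not break this property, because it treats every position the same way. The sketch below checks $T(\tau_x f) = \tau_x (T f)$ for a toy layer made of a circular convolution followed by ReLU. The function `conv_layer` and the kernel values are assumptions chosen for illustration, not taken from any particular library.

```python
import numpy as np

def conv_layer(x, kernel):
    """Toy layer: circular convolution followed by a pointwise ReLU."""
    # np.roll(x, j)[i] == x[i - j], so this sums kernel[j] * x[i - j] over j.
    linear = sum(w * np.roll(x, j) for j, w in enumerate(kernel))
    return np.maximum(linear, 0.0)

rng = np.random.default_rng(1)
f = rng.normal(size=10)
kernel = [0.5, -1.0, 0.25]   # hypothetical filter weights
x = 4                        # shift amount

lhs = conv_layer(np.roll(f, x), kernel)   # T(tau_x f)
rhs = np.roll(conv_layer(f, kernel), x)   # tau_x (T f)

is_equivariant = np.allclose(lhs, rhs)
print(is_equivariant)
```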

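Because of this, the linear operation is actually a convolution. Every linear, shift-equivariant operator is a convolution with some kernel. That’s not just a lucky coincidence but a classical result from signal processing: such an operator is completely determined by how it responds to a single impulse, and that impulse response is the convolution kernel.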
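
The reasoning can be sketched numerically. Any input is a weighted sum of shifted impulses, so linearity plus equivariance force the output to be the same weighted sum of shifted impulse responses, which is exactly a convolution. The example below assumes a finite 1-D signal with wrap-around shifts; the matrix `T` is a stand-in operator built for the demonstration.

```python
import numpy as np

n = 6
rng = np.random.default_rng(2)
h = rng.normal(size=n)   # hypothetical kernel, used only to build T

# T[i, j] = h[(i - j) % n]: each column is a shifted copy of h.
T = np.stack([np.roll(h, j) for j in range(n)], axis=1)

# S shifts a vector by one position (with wrap-around).
S = np.roll(np.eye(n), 1, axis=0)
commutes = np.allclose(T @ S, S @ T)   # T is shift-equivariant

# Probe T with a unit impulse at position 0 to read off its kernel.
impulse = np.zeros(n)
impulse[0] = 1.0
response = T @ impulse

# Rebuild T f as a weighted sum of shifted impulse responses.
f = rng.normal(size=n)
rebuilt = sum(f[j] * np.roll(response, j) for j in range(n))
matches = np.allclose(T @ f, rebuilt)
print(commutes, matches)
```

Here the impulse response recovers `h`, so knowing how the operator treats one impulse is enough to know how it treats every input.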

What does this mean in practice? CNNs can efficiently learn patterns that respect this symmetry, which makes them well suited to tasks like image and video recognition. Instead of having to learn a separate filter for each position in the image, the convolution shares the same weights across all positions. This weight sharing is a big reason convolutional layers need far fewer parameters than fully connected layers, and why they are often more effective for visual tasks.
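
A quick back-of-the-envelope count shows how much weight sharing saves. The numbers below are assumptions for illustration: a single-channel 32×32 image, a layer that produces an output of the same size, a 3×3 kernel, and no bias terms.

```python
# Hypothetical sizes chosen for illustration.
height, width = 32, 32
kernel_size = 3

pixels = height * width

# Fully connected: every output pixel has its own weight for every input pixel.
dense_weights = pixels * pixels

# Convolution: one small kernel reused at every position.
conv_weights = kernel_size * kernel_size

print(dense_weights, conv_weights)   # 1048576 9
```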

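If you want to go deeper, there is a related result called the Convolution Theorem, a foundational concept in signal processing and mathematics. It states that convolution in one domain (like time or space) corresponds to pointwise multiplication in the other (frequency). The connection to shift equivariance is that the Fourier transform turns both shifts and convolutions into simple multiplications, which is why convolutions are the natural model for what signal processing calls shift-invariant systems and what machine learning calls shift-equivariant operators.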
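
The theorem is easy to check numerically in the discrete, circular setting, where the relevant transform is the discrete Fourier transform. This sketch assumes two random 1-D signals of the same length and compares a direct circular convolution with the FFT route; the variable names are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(3)
n = 16
f = rng.normal(size=n)
g = rng.normal(size=n)

# Direct circular convolution: (f * g)[i] = sum_j f[j] * g[(i - j) % n].
direct = np.array(
    [sum(f[j] * g[(i - j) % n] for j in range(n)) for i in range(n)]
)

# Frequency route: transform, multiply pointwise, transform back.
via_fft = np.fft.ifft(np.fft.fft(f) * np.fft.fft(g)).real

same = np.allclose(direct, via_fft)
print(same)
```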

For those curious to dive into the math behind this: check out resources like the Stanford CS231n course for an excellent deep dive into CNNs, or the MIT OpenCourseWare for visual computing which covers convolutional operators and their properties.

To wrap up, understanding that CNNs are filters designed to spot shifted versions of the same pattern helps explain why they work so well and why convolutional layers have become the backbone of modern image processing and computer vision.

So next time you enjoy your photo app automatically tagging things or your favorite smart assistant interpreting images, you can think about the convolutional neural networks quietly doing their shift-equivariant magic behind the scenes.

Key Takeaways About Convolutional Neural Networks

- CNNs use linear operators followed by nonlinear activation functions.

- The linear operators in CNNs are shift-equivariant, meaning the operation respects spatial translations.

- Mathematically, every linear, shift-equivariant operator is a convolution with some kernel; the Convolution Theorem adds that convolution becomes pointwise multiplication in the frequency domain.

- This property lets CNNs share weights across the image, making pattern recognition efficient and robust.

Keep exploring and you might find yourself seeing the world through the lens of convolutions and patterns!