Understanding artificial general intelligence and how its definition might change with perspective

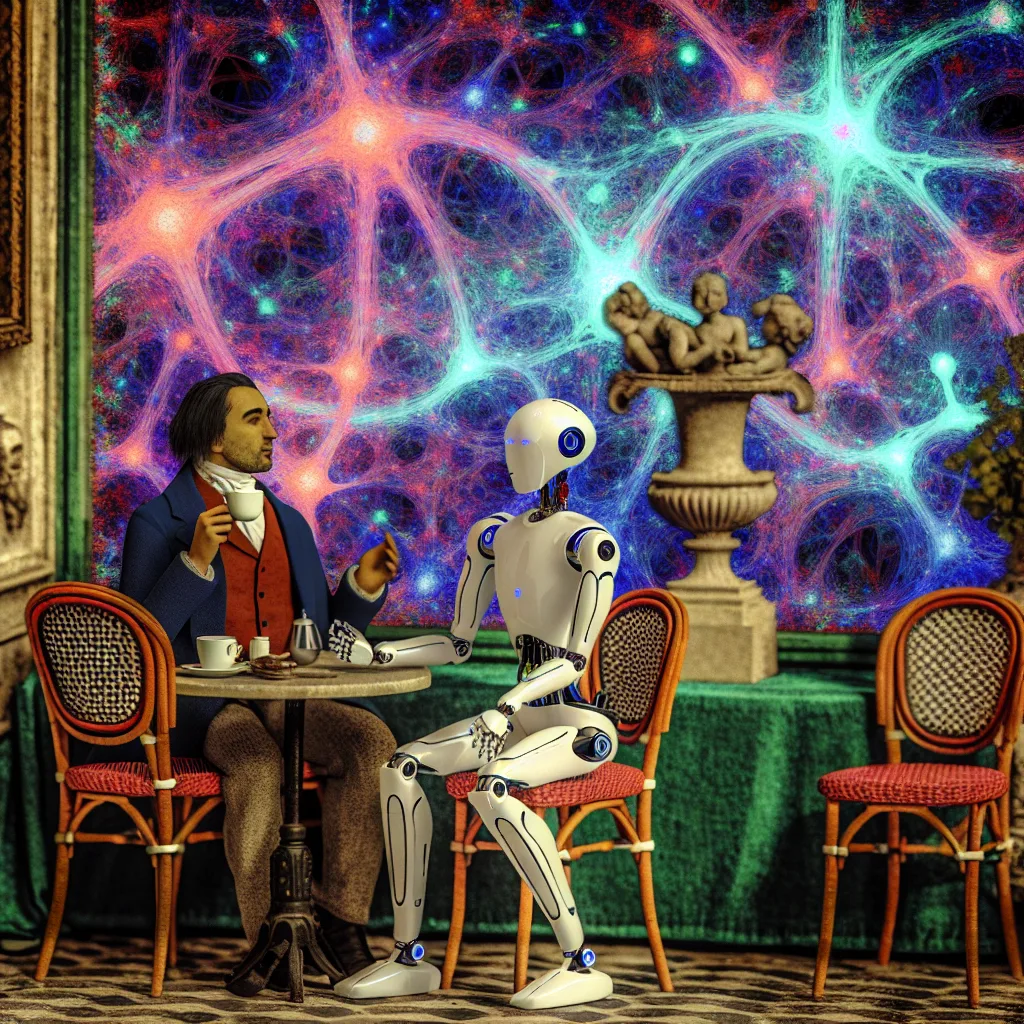

Have you ever wondered what people really mean when they talk about artificial general intelligence (AGI)? It sounds impressive, but what would AGI actually look like? If the average person scored 80 on today’s IQ scale, would current AI be considered AGI? That question gets at something interesting: how intelligent a system seems may depend on your baseline.

Artificial general intelligence is usually described as a system that can perform any intellectual task a human can. But what if the standard were an extraterrestrial civilization ten times more intelligent than us? Would our definition of AGI change? The thought experiment shows how much the concept of artificial general intelligence depends on context and expectations.

Is Intelligence Measured by a Threshold?

We often think of intelligence as a clear-cut benchmark, but any threshold for AGI may be more subjective than we realize. Imagine you set the bar for AGI at an IQ of 100, which is the human average by construction. What if other beings measure intelligence differently, or start at a much higher level? That makes the whole idea of artificial general intelligence quite slippery.
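To make the baseline problem concrete, here is a minimal Python sketch. It assumes the common convention of scaling IQ to a mean of 100 and a standard deviation of 15 within a reference population. The raw scores, the two populations, and the function names `deviation_iq` and `clears_bar` are all made up for illustration; none of them come from a real test.

```python
from statistics import mean, pstdev


def deviation_iq(raw_score, population_scores):
    """Scale a raw score to mean 100, SD 15 relative to a reference population."""
    mu = mean(population_scores)
    sigma = pstdev(population_scores)
    return 100 + 15 * (raw_score - mu) / sigma


def clears_bar(raw_score, population_scores, bar=100):
    """Return True if the score meets the bar when normed against this population."""
    return deviation_iq(raw_score, population_scores) >= bar


# Hypothetical raw scores on the same imaginary test.
humans = [40, 45, 50, 55, 60]
smarter_civilization = [90, 95, 100, 105, 110]

machine_raw = 60  # one fixed performance, judged against two baselines

print(clears_bar(machine_raw, humans))                # True
print(clears_bar(machine_raw, smarter_civilization))  # False
```

The machine's performance never changes. Only the population it is measured against does, and that alone flips the verdict.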

The Role of Self-Improvement in Artificial General Intelligence

One key ingredient often discussed for true artificial general intelligence is the ability to self-improve without limits. But does AGI have to be capable of unlimited self-improvement, or is some level of self-improvement enough? Most current AI systems can learn from data and adapt to new tasks, but they don’t fully rewrite their own code. True AGI might need that kind of open-ended growth to match or surpass human intelligence in every way.

Why Context Matters in Defining Artificial General Intelligence

The points above bring us to a fascinating idea: artificial general intelligence isn’t just about a fixed level of intelligence. It’s about adaptability, learning, and potentially surpassing human capabilities. But how do we define it across different points of view?

- For humans, the AGI label might apply once an AI thinks as flexibly as we do.

- For a civilization smarter than us, the bar might be much higher.

This makes AGI less of an absolute state and more of a spectrum, shifting based on needs, abilities, and context.

What Does This Mean for Us?

Understanding artificial general intelligence as a subjective and evolving concept pushes us to think differently about the tech we build. It reminds us to stay curious and open-minded about how intelligence and learning might look in future machines.

If you’re curious to dive deeper, consider reading more about the development of AI and what researchers say about the limits of artificial intelligence. Both offer solid, grounded insights as we explore what it really means for AI to be “intelligent.”

Artificial general intelligence isn’t a clear finish line but more of a moving target — one that could reshape how we understand intelligence itself. Next time someone talks about AGI, remember it might just depend on who’s doing the measuring!