Let’s explore the fascinating cat-and-mouse game of AI image detection and see if a machine can really be taught to spot a fake.

A friend and I were looking at a photo online the other day: a stunning, hyper-realistic landscape that seemed too perfect to be real. “Is that AI?” he asked. I honestly couldn’t tell. It got me thinking about a puzzle: if AI can create images that are indistinguishable from reality, could we use the same technology to catch the fakes? AI image detection is more than a passing curiosity; it’s becoming one of the most interesting and important challenges in tech today.

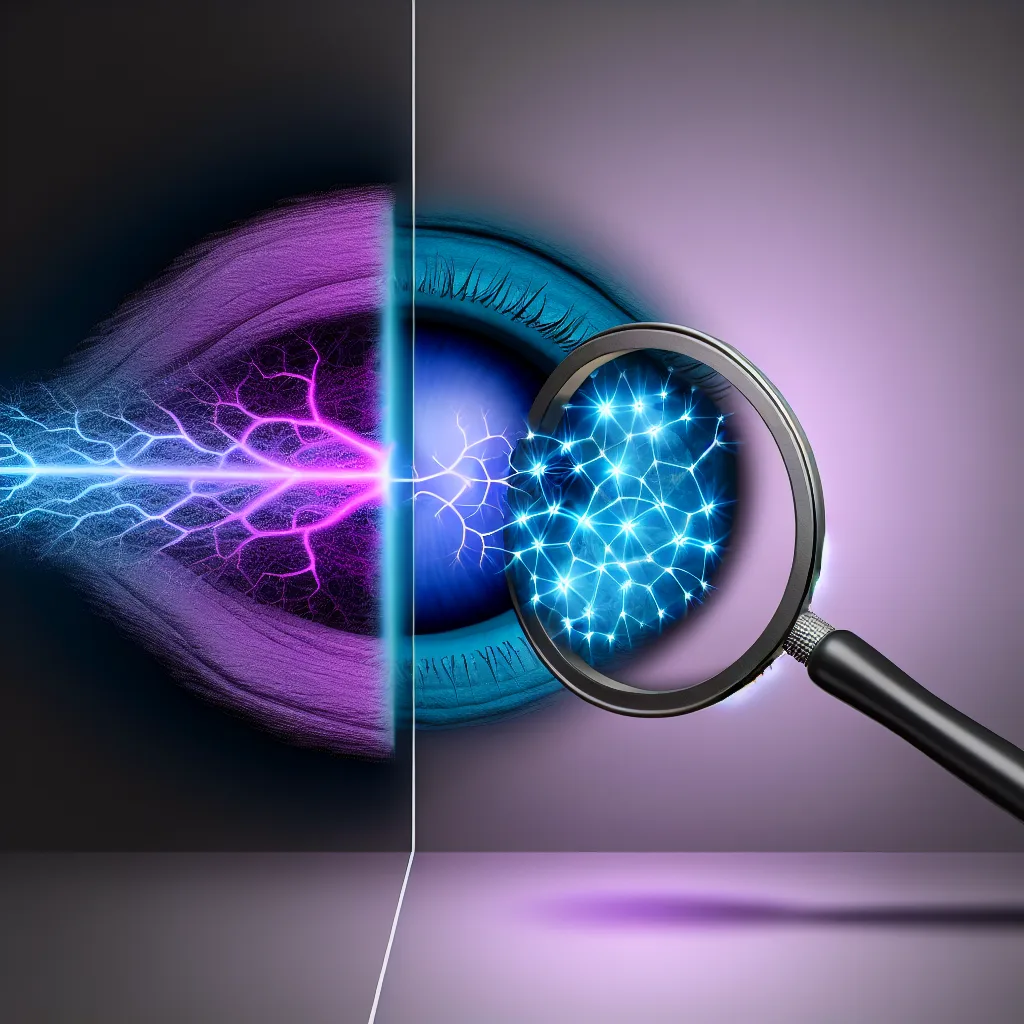

It’s a digital cat-and-mouse game. Every time we think we’ve found a reliable tell for a generated image, like six-fingered hands or unnaturally smooth skin, the models get an update and those little imperfections vanish. The fakes keep improving at remarkable speed. So how do you build a detective that can keep up?

Understanding the AI Image Detection Challenge

So why is this so hard? Because AI image generators aren’t copying and pasting pixels. They learn the statistical patterns that make a picture look real: trained on millions of real photos, they pick up how light, shadow, texture, and context usually behave. When they create an image, they generate a brand-new set of pixels that, to our eyes, follows all the rules of a real photograph.

Early on, you could look for clues:

* Gibberish Text: AI models struggled to render coherent lettering in the background.

* Unnatural Patterns: Repeating textures on clothing or in nature that looked just a little too perfect.

* Logical Flaws: Reflections that didn’t quite match up or shadows falling the wrong way.

But the latest models are much better at avoiding these mistakes, so the solution can’t be a simple checklist for humans. We need a system that can pick up the invisible statistical fingerprints that the generation process leaves behind.
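To make the idea of a “statistical fingerprint” a little more concrete, here is a minimal sketch of one kind of low-level statistic a detector could compute: the energy of a high-pass residual, meaning how much each pixel differs from the average of its four neighbors. This is an illustrative assumption rather than a known-reliable test. The function name `residual_energy` is hypothetical, and the image is assumed to be a plain grayscale grid of numbers.

```python
from typing import List

Image = List[List[float]]  # hypothetical format: grayscale rows of pixel intensities


def residual_energy(img: Image) -> float:
    """Mean squared high-pass residual over the interior pixels.

    The residual at each pixel is its value minus the mean of its four
    neighbors (up, down, left, right). Smooth areas and linear gradients give
    residuals near zero; fine-grained noise or texture gives large ones.
    """
    h, w = len(img), len(img[0])
    if h < 3 or w < 3:
        raise ValueError("image must be at least 3x3")
    total = 0.0
    count = 0
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            neighbor_mean = (img[y - 1][x] + img[y + 1][x]
                             + img[y][x - 1] + img[y][x + 1]) / 4
            r = img[y][x] - neighbor_mean
            total += r * r
            count += 1
    return total / count


if __name__ == "__main__":
    flat = [[128.0] * 8 for _ in range(8)]
    checker = [[255.0 if (x + y) % 2 else 0.0 for x in range(8)] for y in range(8)]
    print(residual_energy(flat))     # prints 0.0
    print(residual_energy(checker))  # prints 65025.0
```

On its own, a number like this mostly measures how noisy or finely textured a picture is; it says nothing definitive about whether the picture is real. The hope behind learned detectors is that combinations of many such low-level statistics, weighted through training, separate the two classes where any single one fails.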

How Can We Train an AI to Be a Digital Detective?

This brings us back to the core idea. If a machine can learn to create, it can also learn to critique. The concept is surprisingly intuitive and builds on a framework that has been used in AI for years: Generative Adversarial Networks, or GANs.

As NVIDIA’s AI blog explains in a helpful overview, a GAN has two parts working against each other:

1. The Generator: This is the artist. Its job is to create fake images that look as real as possible.

2. The Discriminator: This is the detective. Its job is to look at an image and decide if it came from the Generator or from the real world.

In practice, the two are trained in alternation. The Discriminator is updated to get better at telling real images from fakes, and the Generator is updated using the Discriminator’s feedback so that its next fakes are harder to catch. This back-and-forth pushes both of them to become increasingly sophisticated.

For AI image detection, we’re essentially interested in building a super-powered Discriminator, trained on its own as a classifier rather than as half of a GAN. We’d feed it a massive dataset of millions of images, half real and half AI-generated, and train it to recognize the subtle, almost imperceptible patterns that set generated images apart.
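Stripped of the GAN machinery, the super-powered Discriminator is a binary classifier. The sketch below is a deliberately simple stand-in for the deep networks real detectors use: logistic regression trained by gradient descent, in plain Python. It assumes each image has already been reduced to a short numeric feature vector (feature extraction is left out), labels real photos 0 and AI-generated images 1, and uses made-up two-feature toy data. `train_detector` and `predict_fake_probability` are hypothetical names.

```python
import math
import random
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_fake_probability(weights: List[float], bias: float, x: Vector) -> float:
    """Estimated probability that feature vector x came from a generator."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_detector(
    data: List[Tuple[Vector, int]], epochs: int = 500, lr: float = 0.5
) -> Tuple[List[float], float]:
    """Fit logistic regression with full-batch gradient descent on log-loss.

    Labels: 0 = real photo, 1 = AI-generated.
    """
    n_features = len(data[0][0])
    weights = [0.0] * n_features
    bias = 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in data:
            # Derivative of the log-loss with respect to the logit.
            err = predict_fake_probability(weights, bias, x) - y
            for i, xi in enumerate(x):
                grad_w[i] += err * xi
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


if __name__ == "__main__":
    rng = random.Random(0)
    # Toy data: half "real" (label 0) near (0, 0), half "fake" (label 1) near (2, 2).
    real = [((rng.gauss(0, 0.5), rng.gauss(0, 0.5)), 0) for _ in range(100)]
    fake = [((rng.gauss(2, 0.5), rng.gauss(2, 0.5)), 1) for _ in range(100)]
    data = real + fake
    w, b = train_detector(data)
    correct = sum((predict_fake_probability(w, b, x) >= 0.5) == bool(y) for x, y in data)
    print(f"training accuracy: {correct / len(data):.2f}")
```

The balanced toy set mirrors the “half real and half AI-generated” setup. In an actual GAN, a classifier like this would be retrained in alternation with a Generator that is actively trying to fool it; here it is trained once on a fixed dataset.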

Is Anyone Actually Doing This?

Yes! This isn’t just a theoretical project anymore; it’s a full-blown field of study. Researchers regularly post papers on new detection techniques to preprint servers like arXiv.org, and tech companies are building tools to detect AI-generated images.

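Intel, for example, has been working on a technology called FakeCatcher that analyzes subtle, blood-flow-related changes in the pixels of a person’s face to determine whether a video is real or a deepfake. Because those changes play out over time, this approach targets video rather than single still images. It’s a clever twist: instead of hunting for the generator’s mistakes, it looks for authentic human biological signals that a real face should contain.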
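The following is not Intel’s implementation. It is a generic, heavily simplified illustration of the idea behind reading blood flow from video, usually called remote photoplethysmography (rPPG): skin color shifts very slightly with each heartbeat, so an average color value of a face region (often the green channel), tracked frame by frame, should carry a faint periodic signal in the human heart-rate range. The function `dominant_pulse_bpm`, the 0.7 to 4 Hz search band, and the synthetic input are assumptions for illustration.

```python
import math
import random
from typing import Sequence


def dominant_pulse_bpm(
    green_means: Sequence[float], fps: float, low_hz: float = 0.7, high_hz: float = 4.0
) -> float:
    """Return the strongest frequency within [low_hz, high_hz], in beats per minute.

    green_means: hypothetical per-frame average green value of a face region.
    Uses a plain discrete Fourier transform (O(n^2)), fine for short clips.
    Returns 0.0 if no frequency bin falls inside the band.
    """
    n = len(green_means)
    mean = sum(green_means) / n
    signal = [v - mean for v in green_means]  # remove the constant (DC) component
    best_freq, best_power = 0.0, -1.0
    for k in range(1, n // 2 + 1):
        freq = k * fps / n  # frequency of DFT bin k, in Hz
        if not (low_hz <= freq <= high_hz):
            continue
        re = sum(s * math.cos(2 * math.pi * k * t / n) for t, s in enumerate(signal))
        im = -sum(s * math.sin(2 * math.pi * k * t / n) for t, s in enumerate(signal))
        power = re * re + im * im
        if power > best_power:
            best_freq, best_power = freq, power
    return best_freq * 60.0


if __name__ == "__main__":
    rng = random.Random(1)
    fps = 30.0
    # Synthetic 10-second clip: a faint 1.2 Hz (72 bpm) ripple plus sensor noise.
    frames = [
        120.0 + 0.3 * math.sin(2 * math.pi * 1.2 * (i / fps)) + rng.gauss(0, 0.1)
        for i in range(300)
    ]
    print(round(dominant_pulse_bpm(frames, fps)))  # prints 72
```

A real system would also need face detection and tracking, lighting compensation, and far more robust signal processing. The intuition behind biological-signal detectors is that genuine footage shows a consistent pulse like this one, while synthesized faces may not.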

The catch is that as soon as a new detection method appears, image generators can be trained specifically to evade it. It’s a never-ending cycle. So the realistic goal may not be a perfect, 100% accurate detector, but a tool that works well enough to add a layer of verification and trust back into our digital world.
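“Works well enough” can be made measurable. Assuming a detector that outputs a score between 0 and 1 for each image and a labeled test set, the minimal sketch below computes two rates at a chosen decision threshold: the share of fakes caught (true-positive rate) and the share of real photos wrongly flagged (false-positive rate). The scores are made up for illustration, and `rates_at_threshold` is a hypothetical helper.

```python
from typing import List, Tuple


def rates_at_threshold(scored: List[Tuple[float, int]], threshold: float) -> Tuple[float, float]:
    """Return (true_positive_rate, false_positive_rate).

    scored: (detector score, label) pairs, label 1 = AI-generated, 0 = real.
    An image is flagged as fake when its score >= threshold.
    """
    fakes = [s for s, y in scored if y == 1]
    reals = [s for s, y in scored if y == 0]
    tpr = sum(s >= threshold for s in fakes) / len(fakes)
    fpr = sum(s >= threshold for s in reals) / len(reals)
    return tpr, fpr


if __name__ == "__main__":
    # Made-up scores for four fakes and four real photos.
    scored = [(0.95, 1), (0.80, 1), (0.60, 1), (0.30, 1),
              (0.70, 0), (0.40, 0), (0.20, 0), (0.05, 0)]
    for t in (0.5, 0.75):
        tpr, fpr = rates_at_threshold(scored, t)
        print(f"threshold {t}: catches {tpr:.0%} of fakes, flags {fpr:.0%} of real photos")
    # threshold 0.5: catches 75% of fakes, flags 25% of real photos
    # threshold 0.75: catches 50% of fakes, flags 0% of real photos
```

Raising the threshold here flags fewer real photos but lets more fakes through; choosing where to sit on that trade-off is part of deciding what “well enough” means for a given use.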

So, the next time you see a photo that feels just a little too perfect, you might be right to be skeptical. While our eyes might be fooled, the future of digital trust may lie in training a new kind of AI: one that’s less of an artist and more of a detective.