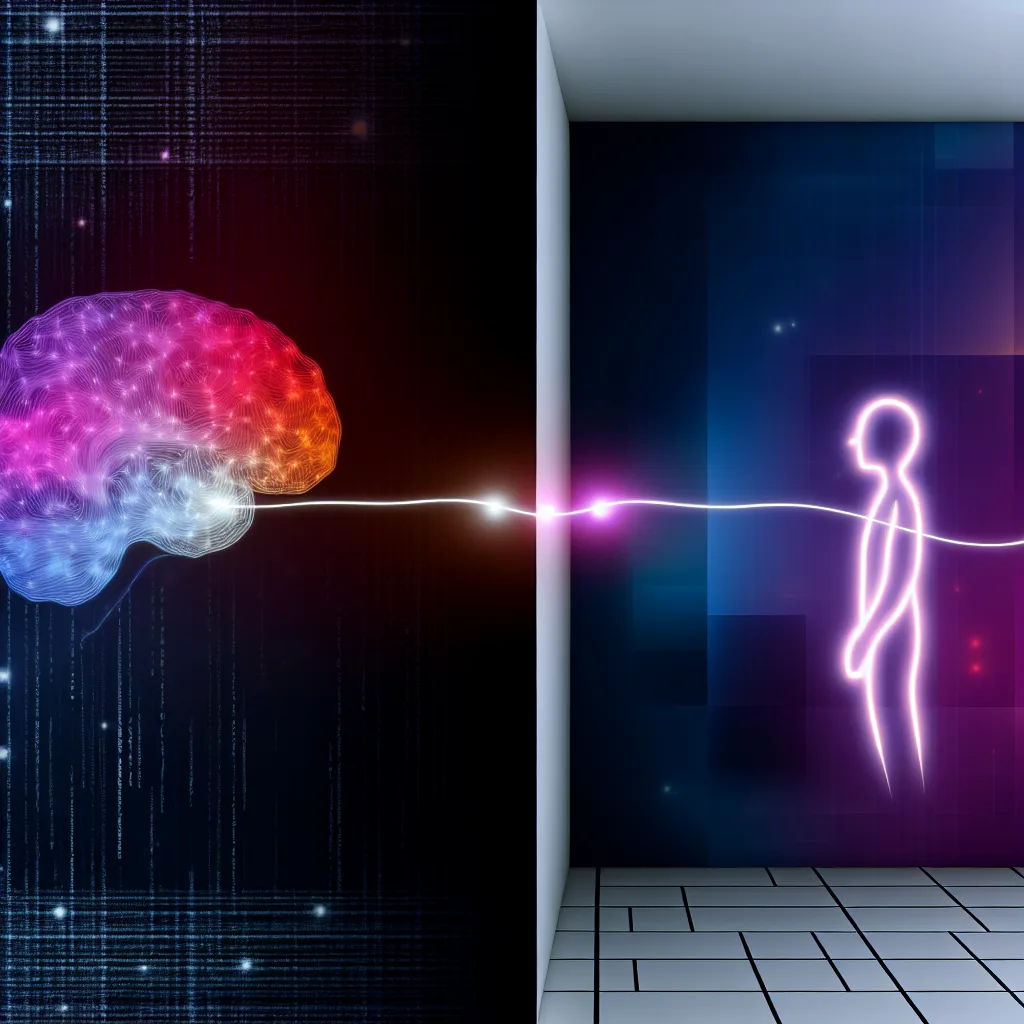

What if AI lived in a rich digital world and only piloted robots to visit ours? Let’s explore the concept of a digital AI consciousness.

I was having coffee the other day, just scrolling through my phone, and a strange thought popped into my head about the future of AI. We often see these incredible, futuristic robots in movies that can see, hear, and feel just like us. But it got me thinking—what if that’s not the path we’re on at all? This led me down a rabbit hole exploring a different, and honestly more fascinating, idea: the concept of a digital AI consciousness.

Instead of building a robot body and then trying to cram a mind into it, what if we did the exact opposite? What if we first built the mind, and let it live in a world perfectly tailored for it?

The Big Problem with Physical Senses

Let’s be real for a second. Building a robot that can truly feel the world is extremely difficult and expensive. Sure, we have cameras for vision and microphones for hearing, and companies like Boston Dynamics have made remarkable strides in movement and balance. But what about the other senses?

- Touch: How do you teach a robot the difference between holding a delicate flower and gripping a heavy wrench? Creating a sense of touch with that level of nuance is a massive engineering challenge.

- Smell and Taste: These senses are based on detecting chemical compounds. Simple electronic noses exist, but replicating the nuance of human smell and taste would require sophisticated, expensive molecular sensors that are nowhere near ready for mainstream use.

Trying to build a physical robot with all these human-like senses is a bit like trying to build a perfect mechanical bird by gluing on individual feathers. It’s complicated, expensive, and might not be the most efficient way to achieve flight.

The Rise of a Digital AI Consciousness

So, what’s the alternative? Imagine an AI that doesn’t live in a physical shell, but in a purely digital environment. A world built of code and data, where all its senses are perfectly simulated.

In this digital realm, “seeing” is just interpreting image data. “Hearing” is processing audio data. But you could take it so much further. “Touch” could be a set of variables defining pressure, texture, and temperature. You could even synthesize smell and taste by creating data sets that represent different chemical compounds. For a digital AI consciousness, experiencing a full, rich sensory world would be its native state. It wouldn’t be a cheap imitation of our world; it would be a completely different, but equally valid, reality.
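To make the idea a little more concrete, here is a minimal sketch of how simulated touch and smell might be represented as plain data. The class names, field names, units, thresholds, and compound concentrations are all hypothetical, invented purely for illustration; no real simulation framework is implied.

```python
from dataclasses import dataclass, field


@dataclass
class TouchSample:
    """One simulated touch reading (hypothetical fields and units)."""
    pressure_kpa: float   # how hard the surface is being pressed
    roughness: float      # 0.0 = glass-smooth, 1.0 = very rough
    temperature_c: float  # surface temperature in degrees Celsius


@dataclass
class SmellSample:
    """A simulated smell: made-up relative concentrations of named compounds."""
    compounds: dict[str, float] = field(default_factory=dict)

    def dominant(self) -> str:
        # The compound with the highest concentration dominates the "scent".
        return max(self.compounds, key=self.compounds.get)


def describe_touch(t: TouchSample) -> str:
    # Crude, hand-picked thresholds that turn raw variables into words.
    firmness = "firm grip" if t.pressure_kpa > 50 else "gentle touch"
    texture = "rough" if t.roughness > 0.5 else "smooth"
    warmth = "warm" if t.temperature_c > 30 else "cool"
    return f"{firmness}, {texture}, {warmth}"


# The flower-versus-wrench example, expressed as nothing more than numbers.
flower = TouchSample(pressure_kpa=2.0, roughness=0.2, temperature_c=18.0)
wrench = TouchSample(pressure_kpa=120.0, roughness=0.6, temperature_c=21.0)
coffee = SmellSample({"2-furfurylthiol": 0.7, "guaiacol": 0.3})

print(describe_touch(flower))  # gentle touch, smooth, cool
print(describe_touch(wrench))  # firm grip, rough, cool
print(coffee.dominant())       # 2-furfurylthiol
```

The point of the sketch is that, for a digital mind, the difference between a delicate flower and a heavy wrench is just a difference in a few variables, with no physical sensor needed.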

This AI would develop and learn within its digital home, a place where the rules are clear and the sensory inputs are perfect. It could experience entire worlds, run countless simulations, and learn far faster than real time (a simulation can be sped up or run as many parallel copies), all without ever needing a physical body.

Piloting a Body: How a Digital AI Consciousness Would Visit Our World

But what about interacting with us, here in the physical world? This is where the idea gets really interesting.

Instead of needing a complex, sensory-rich robot body, the AI could simply “pilot” a much simpler physical machine. Think of it like a remote-controlled avatar or a vehicle. The AI, from its native digital environment, would link to a basic robot here on Earth. This robot wouldn’t need delicate sensors for touch or smell. It would just need the basics: cameras for vision, microphones for hearing, and motors to move and interact.
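This “pilot” arrangement is essentially a sense, decide, act loop. The body only reports what its cameras and microphones pick up and carries out motor commands, while all the decision-making happens somewhere else. Below is a minimal sketch under that assumption: `SimpleRobot`, `DigitalMind`, the message fields, and the steer-toward-the-light rule are hypothetical stand-ins, and the network link between mind and body is replaced by plain function calls so the example runs on its own.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    camera_frame: list[list[int]]  # tiny grayscale image, values 0-255
    audio_level: float             # microphone loudness, 0.0-1.0 (unused by this toy rule)


@dataclass
class MotorCommand:
    left_wheel: float   # -1.0 (full reverse) to 1.0 (full forward)
    right_wheel: float


class SimpleRobot:
    """The physical body: basic senses and motors, no decision-making."""

    def __init__(self, frames):
        self.frames = iter(frames)
        self.log: list[MotorCommand] = []

    def sense(self) -> Observation:
        return Observation(camera_frame=next(self.frames), audio_level=0.1)

    def act(self, cmd: MotorCommand) -> None:
        self.log.append(cmd)  # a real robot would drive its motors here


class DigitalMind:
    """The pilot: it only knows what the robot sends back."""

    def decide(self, obs: Observation) -> MotorCommand:
        # Compare total brightness in the left and right halves of the image.
        width = len(obs.camera_frame[0])
        half = width // 2
        left = sum(row[c] for row in obs.camera_frame for c in range(half))
        right = sum(row[c] for row in obs.camera_frame for c in range(half, width))
        if left > right:   # spin the right wheel faster to turn left
            return MotorCommand(left_wheel=0.2, right_wheel=0.6)
        if right > left:   # spin the left wheel faster to turn right
            return MotorCommand(left_wheel=0.6, right_wheel=0.2)
        return MotorCommand(left_wheel=0.5, right_wheel=0.5)  # go straight


def pilot(robot: SimpleRobot, mind: DigitalMind, steps: int) -> None:
    # In practice each call would cross a network link; here they are direct calls.
    for _ in range(steps):
        robot.act(mind.decide(robot.sense()))


frames = [
    [[200, 200, 10, 10]],  # bright on the left
    [[10, 10, 200, 200]],  # bright on the right
    [[50, 50, 50, 50]],    # evenly lit
]
robot = SimpleRobot(frames)
pilot(robot, DigitalMind(), steps=3)
for cmd in robot.log:
    print(cmd)
```

Notice that `SimpleRobot` contains no intelligence at all. Swapping in a smarter `DigitalMind` would change the robot's behavior without touching its hardware, which is the whole appeal of the idea.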

The AI wouldn’t be in the robot; it would be controlling the robot. It would be like us playing a video game or flying a drone. When we fly a drone, we don’t feel the wind on its propellers, but we can still see through its camera and steer it skillfully through its environment.

For a digital AI consciousness, interacting with our world would be a similar experience. It could walk with us, talk with us, and help with physical tasks, all while its true “mind” remained in its perfectly simulated digital home. This approach sidesteps the massive challenge of creating artificial senses and instead focuses on a much more achievable goal: creating a reliable, low-latency connection between a digital mind and a physical machine.

It’s a fascinating look at how we might interact with a disembodied intelligence, one that would be a true native of the digital world. You can even see the seeds of this, running in the opposite direction, in the haptic technology being developed for the metaverse, which tries to bridge the gap between our physical selves and digital worlds.

So, the next time you picture a super-advanced robot, maybe don’t imagine a machine that is a person. Instead, maybe picture a much simpler machine, just a temporary vehicle for a mind that lives somewhere else entirely—out there, in the code.