Exploring the challenge of moderating content without losing the cultural and historical nuances behind it.

I’ve been thinking a lot lately about how AI-driven censorship is changing the way we experience and understand history and art. The problem goes beyond filtering out harmful or offensive content: these filters sometimes strip away the very context that gives images, symbols, and messages their meaning.

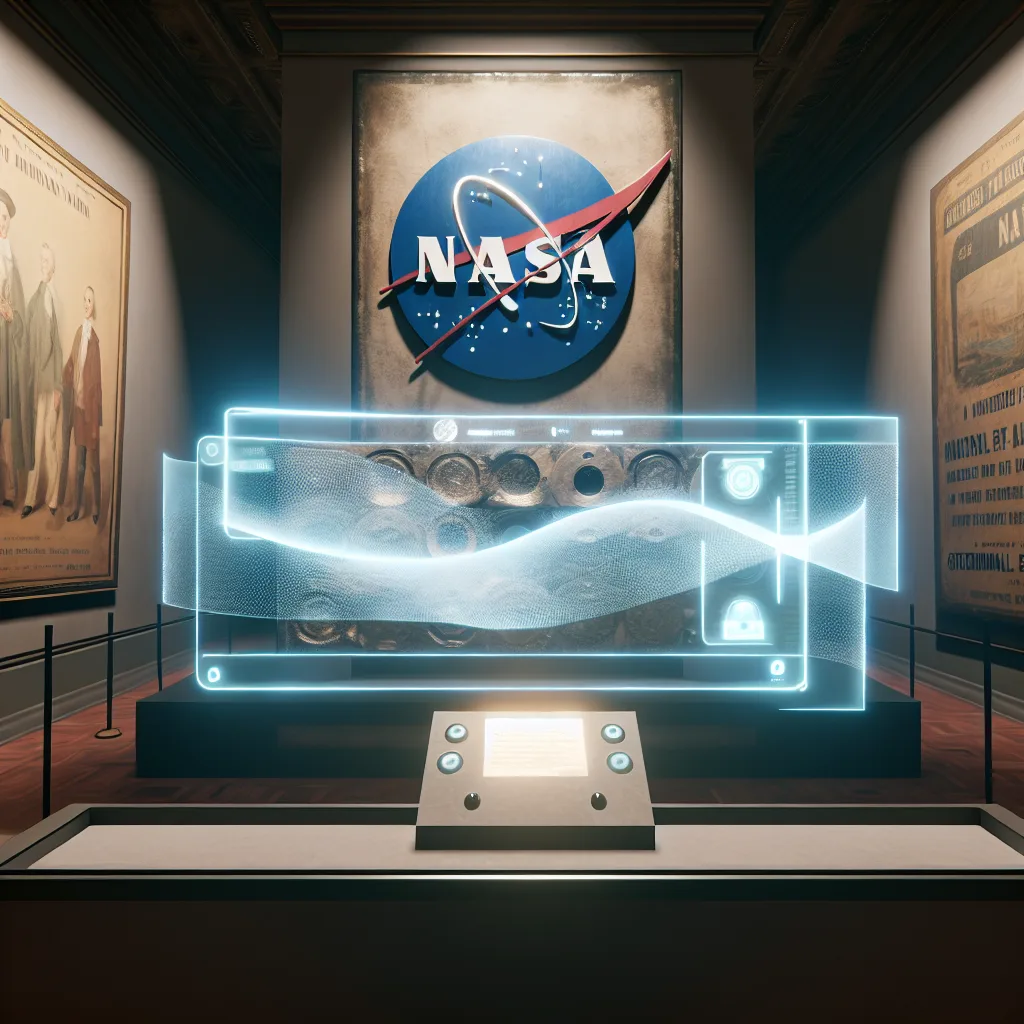

Take NASA’s Pioneer plaque, carried aboard the Pioneer 10 and Pioneer 11 spacecraft. It was designed to communicate with any extraterrestrial life that might encounter it, and it shows an unclothed man and woman alongside scientific information about where the spacecraft came from. When AI moderation systems step in, imagery like this can get flagged or censored, because a filter that looks for nudity sees only the figures and not the purpose behind them. Suddenly, something historically significant is treated as questionable or even NSFW, and that feels off.

Why Context Matters in AI Censorship

The key issue with AI censorship is that automated systems don’t always understand nuance. They’re trained to spot certain elements, such as nudity, political symbols, or violent imagery, and to block or flag them to protect users. But these filters operate mostly on pattern recognition, not on an understanding of the background, intention, or cultural significance of what they see.

This means posters from political movements, historic artifacts, or artworks can get censored, even if their purpose is to educate or document an important era. When that happens, we risk losing access to these pieces in their full, original context.

Examples Beyond the Pioneer Plaque

Political posters from past movements often carry strong imagery that can be mistakenly flagged by AI content filters. Much like the Pioneer Plaque, these posters represent critical moments in history. Censoring them removes educational value and sanitizes history in a way that’s dangerous for our collective memory.

This issue isn’t just theoretical. As AI moderation becomes more common on social media platforms, the risk grows that important cultural artifacts and political context will be filtered out in the name of safety or decency.

Finding a Balance: Protecting While Preserving

So, how do platforms strike the right balance? Here are a few thoughts:

- Transparency: Platforms should be clear about how their AI moderation works and where it might fail on context.

- Human Oversight: Combining human judgment with AI tools can help differentiate between harmful content and culturally significant material.

- Democratic Input: Letting users and experts weigh in on what gets flagged or removed ensures more voices shape the rules.
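
To make the human oversight idea a bit more concrete, here is a minimal Python sketch of how a platform might route flagged content. Everything in it is an assumption for illustration: the `Item` fields, the context tags, and the thresholds are hypothetical and not taken from any real moderation system.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    HUMAN_REVIEW = "human_review"
    REMOVE = "remove"


@dataclass
class Item:
    # Hypothetical schema; a real platform would define its own.
    classifier_score: float                # 0.0 to 1.0 score from an automated filter
    context_tags: frozenset = frozenset()  # e.g. {"museum", "historical"}


# Hypothetical tags that hint at educational or documentary context.
CONTEXT_TAGS = frozenset({"historical", "educational", "museum", "archive", "art"})


def route(item, flag_threshold=0.5, remove_threshold=0.9):
    """Pick an action for an item. Thresholds are illustrative only."""
    if item.classifier_score < flag_threshold:
        return Action.ALLOW
    # Flagged content that carries contextual signals always goes to a person.
    if item.context_tags & CONTEXT_TAGS:
        return Action.HUMAN_REVIEW
    # Only high-confidence flags with no contextual signals are removed automatically.
    if item.classifier_score >= remove_threshold:
        return Action.REMOVE
    return Action.HUMAN_REVIEW
```

Under these assumptions, an image tagged `historical` that scores 0.95 would land in a human review queue instead of being removed automatically, which is the kind of outcome you would want for something like the Pioneer plaque.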

We want to keep people safe and avoid real harm — but not by erasing or oversimplifying complex history or art.

Why It Matters to You and Me

At the end of the day, AI censorship isn’t just a tech issue. It affects how we remember our world and share knowledge. If you’ve ever looked up historical images online only to find warnings, removals, or blurred content, you’ve run into this problem firsthand.

Transparent and thoughtful moderation respects both safety and cultural nuance. It lets us learn from the past without distorting it.

If you’re curious to dive deeper, this essay offers a really thoughtful take on the war on context through AI filtering: Exploring Censorship, AI, and Context.

For anyone interested in how tech shapes culture and history, this conversation is just getting started. What do you think? How can platforms better handle this tricky balance?

External Resources

- NASA Pioneer Plaque Information – Official details about the historical Pioneer Plaque

- Wikipedia on Content Moderation – Basics of how platforms handle filtering and moderation

- Electronic Frontier Foundation on AI and Free Expression – Advocacy group insights on the implications of censorship