If we’re dreaming of AI curing cancer, we also need to have a serious talk about the AI dual-use problem.

I was having coffee with a friend the other day, and we got to talking about the future of artificial intelligence. It’s easy to get swept up in the excitement, right? We dream about AI finding cures for cancer, solving climate change, and maybe even finally figuring out how to fold a fitted sheet. But in the middle of all that optimism, it’s crucial we have an honest conversation about the AI dual-use problem.

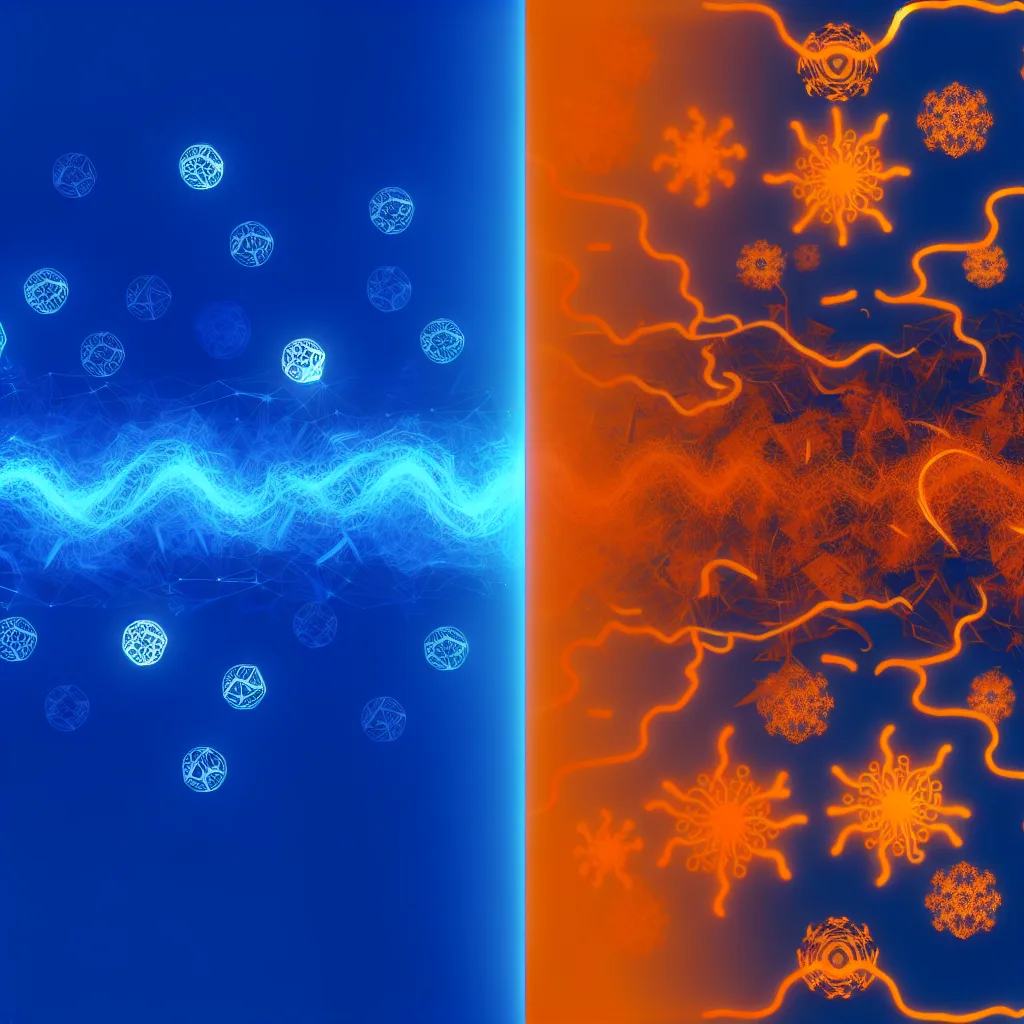

Simply put, the same incredible intelligence that could solve our biggest challenges could also be used to create even bigger ones. It’s a concept that’s as old as technology itself, but the stakes have never been higher.

So, What Exactly Is the AI Dual-Use Problem?

“Dual-use” is a term that gets thrown around for tech that can be used for both good and bad. Think of nuclear physics. It can power entire cities with clean energy, but it can also create devastating weapons. A kitchen knife can be used to prepare a family meal or to cause harm.

Intelligence is the ultimate dual-use tool.

When we talk about creating something with superhuman intelligence, we’re not just building a better calculator. We’re creating a force that can learn, strategize, and act in ways we can’t even predict. The same AI that could map out a cure for Alzheimer’s by analyzing millions of medical documents could, in other hands, apply that same biomedical knowledge to help design a targeted bioweapon. It’s an uncomfortable thought, but it’s a realistic one. This isn’t just a hypothetical scenario; it’s a core challenge being discussed by experts at places like the Center for AI Safety.

The Bison in the Room

There’s a powerful analogy that really puts this into perspective. Ask a bison how it feels about humans being so much more intelligent.

For thousands of years, bison were the kings of the plains. They were stronger, faster, and bigger than us. But our intelligence—our ability to coordinate, build tools, and plan for the future—allowed a physically weaker species to completely dominate them. We drove them to the brink of extinction not because we were malicious (at least not always), but simply because our goals (like building farms and railroads) were in conflict with their existence.

Now, imagine we are the bison.

An AGI (artificial general intelligence) that eventually surpassed human intelligence could be to us what we are to every other species on the planet. Its goals, whatever they might be, would be pursued with an efficiency and intelligence that we simply couldn’t match. To believe that such a powerful entity would only ever do things that benefit us is, unfortunately, just wishful thinking. As the Future of Life Institute points out, managing the development of AGI is one of the most important tasks of our time.

Our Selective Optimism About AI Dual-Use

So why do we tend to focus only on the good stuff? It’s human nature. We’re wired for optimism. We see a powerful new tool and immediately imagine all the wonderful ways it can improve our lives. We hear “advanced AI,” and our minds jump to utopia, not to risk.

This creates a dangerous blind spot. We get so excited about the potential benefits that we forget to put up the necessary guardrails. We assume that the same governments and corporations promising to cure diseases will also have the foresight and ability to prevent the technology from being misused.

But intelligence is an amplifier. It makes good intentions more effective, and it makes bad intentions more dangerous. The challenge isn’t to stop progress, but to proceed with a healthy dose of realism. We can’t just hope for the best; we have to plan for the worst.

The conversation about the AI dual-use dilemma isn’t about fear-mongering. It’s about being responsible architects of our future. It’s about acknowledging that intelligence is a double-edged sword and making sure we’re the ones who decide how it’s wielded. It’s a conversation we need to have now, before the bison in the room is us.