Considering the FS S3260-10S for your homelab? A detailed look at my plan, the key questions, and whether it’s the right 10GbE switch for you.

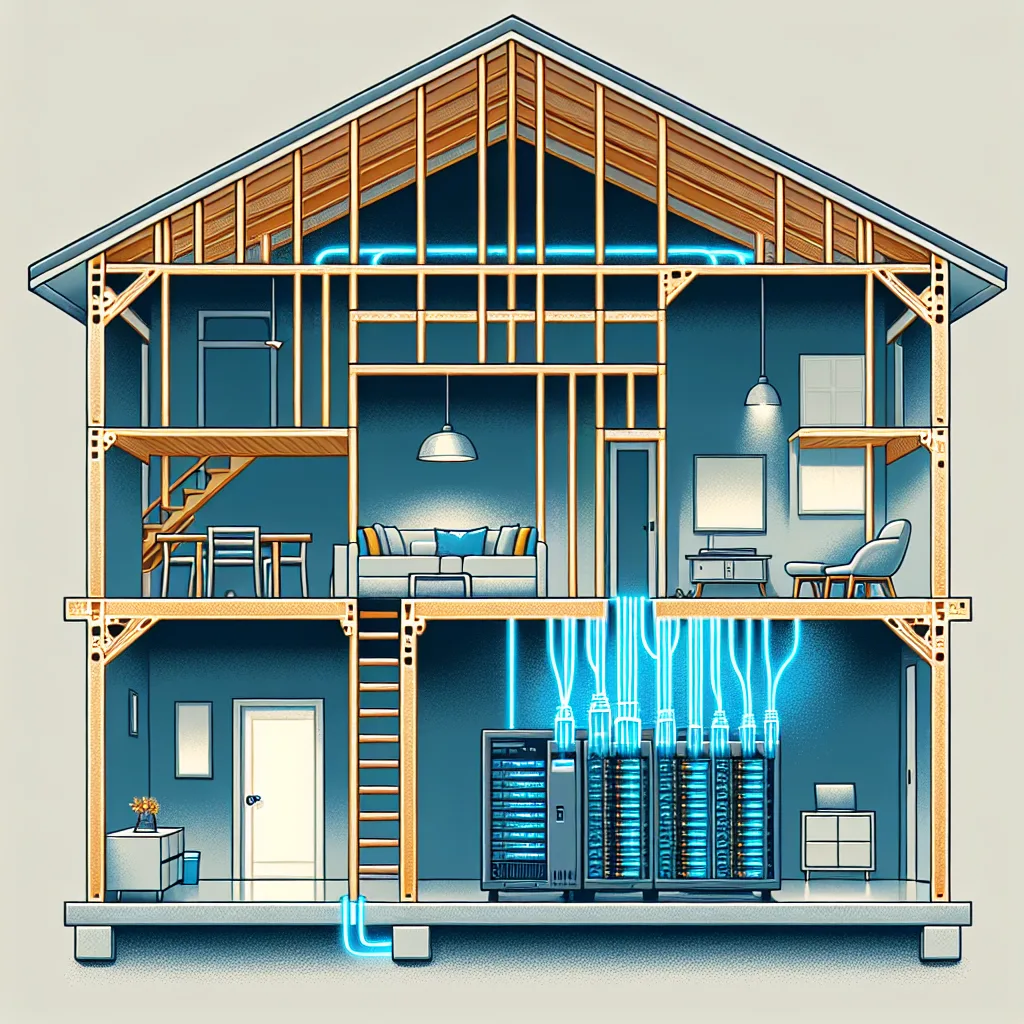

I think I’m ready for a network upgrade. My homelab has been running happily on 1GbE for a while, but with bigger files, faster servers, and more complex projects, the old network is starting to feel like a bottleneck.

So I’ve been hunting for a 10GbE switch that fits the bill: fast enough for my needs, quiet enough that it won’t drive me out of the room, and, honestly, affordable.

That search led me to the FS S3260-10S. On paper, it looks almost perfect. It has ten 10GbE SFP+ ports and a couple of standard 1GbE RJ45 ports for good measure. That mix of ports feels just right for what I have in mind.
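To put a rough number on the 1GbE bottleneck, here is a back-of-the-envelope sketch of transfer times at each speed. It assumes a hypothetical 100 GB file, assumes roughly 94% of line rate is usable after Ethernet/IP/TCP overhead, and assumes the disks on both ends can keep up. `transfer_seconds` is just an illustrative helper, not a measurement tool.

```python
# Back-of-the-envelope transfer times at 1GbE vs 10GbE.
# Assumptions (not measurements): 100 GB payload, ~94% of line rate usable
# after Ethernet/IP/TCP overhead, and disks fast enough to keep up.

LINK_SPEEDS_GBPS = {"1GbE": 1, "10GbE": 10}
EFFICIENCY = 0.94  # assumed usable fraction of line rate


def transfer_seconds(size_gb: float, link_gbps: float, efficiency: float = EFFICIENCY) -> float:
    """Seconds to move size_gb gigabytes (10**9 bytes) over a link_gbps Gbit/s link."""
    bits = size_gb * 8e9
    return bits / (link_gbps * 1e9 * efficiency)


if __name__ == "__main__":
    size_gb = 100  # hypothetical VM image or backup set
    for name, gbps in LINK_SPEEDS_GBPS.items():
        minutes = transfer_seconds(size_gb, gbps) / 60
        print(f"{name}: {minutes:.1f} min for {size_gb} GB")
```

Under those assumptions it prints about 14.2 minutes at 1GbE versus about 1.4 minutes at 10GbE. Real-world numbers will be lower if storage, not the network, is the limit.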

Here’s My Plan

My setup is a mix of old and new gear, which is pretty common for a homelab. I need a switch that can bring it all together.

Here’s the connection plan I’ve mapped out:

- My Main Server: I have a Dell PowerEdge T360 with a 10GBase-T network card. I’d connect this using an SFP+ to 10GBase-T transceiver module in the switch. This server handles the heavy lifting, so it needs the full 10GbE speed.

- My Compact Powerhouse: I also run a Minisforum MS-01. It’s a fantastic little machine with a native SFP+ port. For this one, a simple DAC (Direct Attach Copper) cable should do the trick. Quick, easy, and reliable.

- The Backup Server: My trusty backup server is still on 1GbE. I’ll upgrade it to 10GbE eventually, but until then it can use one of the switch’s 1GbE RJ45 ports. No need to overcomplicate things.

- The Uplink: Finally, I need to connect this new 10GbE switch back to my main core switch, a FortiSwitch 124F. I’ll use the other 1GbE port for that uplink.
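One way to sanity-check a plan like this is to model each device’s port speed and remember that a flow between two ports can go no faster than the slower link. The sketch below is a simplification that assumes the switch fabric itself never becomes the bottleneck; the port labels and the `path_gbps` helper are hypothetical names for illustration.

```python
# Hypothetical model of the planned connections; speeds in Gbit/s.
# Assumes the switch fabric is not the bottleneck, so a flow between
# two ports is limited by the slower of the two links.

PORT_SPEED_GBPS = {
    "T360": 10,    # 10GBase-T NIC via SFP+ to 10GBase-T transceiver
    "MS-01": 10,   # native SFP+ via DAC cable
    "backup": 1,   # 1GbE RJ45 port
    "uplink": 1,   # 1GbE RJ45 port to the FortiSwitch 124F (rest of the network)
}


def path_gbps(a: str, b: str, speeds: dict[str, int] = PORT_SPEED_GBPS) -> int:
    """Ceiling (Gbit/s) for traffic between devices on ports a and b of this switch."""
    return min(speeds[a], speeds[b])


if __name__ == "__main__":
    for a, b in [("T360", "MS-01"), ("T360", "backup"), ("MS-01", "uplink")]:
        print(f"{a} <-> {b}: {path_gbps(a, b)} Gbit/s")
```

The T360 and MS-01 get the full 10 Gbit/s between them, while backups and anything reaching the rest of the network through the uplink top out at 1 Gbit/s until those links are upgraded.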

This setup seems solid. It gives my two key servers full 10GbE between each other, while the 1GbE uplink keeps everything connected to the rest of my network. But before I click “buy,” I have a few questions that I need to think through.

The Big Questions on My Mind

A spec sheet can only tell you so much. What I really want to know is how a piece of gear performs in the real world, especially in a home environment.

1. Is it stable?

This is the most important question. A network switch has to be reliable. If it crashes, the whole lab goes down. I need something I can set up and then forget about. I’m not looking for another device that needs constant babysitting.

2. How loud is it, really?

Homelabbers know the struggle. Enterprise gear is often powerful but sounds like a jet engine. My lab is in my office, not a dedicated server room. So, noise is a huge factor. The product page says it has “smart fans,” but that can mean a lot of things. Is it a quiet hum, or a distracting whine? This could be the dealbreaker.

3. Will my gear play nice with it?

I’m planning to use a mix of transceivers and cables: a DAC cable for the MS-01 and an SFP+ to 10GBase-T (RJ45) module for the T360. Some switches are notoriously picky about the modules you use, sometimes locking you into their own expensive brand. I need to know whether the FS S3260-10S is flexible and works well with third-party gear. Nobody has time to troubleshoot compatibility issues.

4. Is the software decent?

I don’t need a super-complex feature set, but I do need to handle the basics without pulling my hair out. I plan on setting up a few VLANs to keep my server traffic separate from my main network traffic. Is the FSOS web interface intuitive? Is the command-line interface (CLI) logical and easy to use for basic L2 switching, simple L3 routing, and VLAN tagging? A clunky interface can turn a simple task into a frustrating ordeal.
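Whatever the interface looks like, it helps to write the VLAN plan down first. The sketch below is vendor-neutral and is not FSOS syntax: the VLAN IDs (10 and 20), their names, the `Port` class, and the `validate` and `dot1q_tag` helpers are all hypothetical. The server ports are untagged access ports on one VLAN, and the uplink is a tagged trunk. The tag builder follows the standard 802.1Q layout (TPID 0x8100, then a 3-bit priority, 1-bit DEI, and 12-bit VLAN ID) to show what "tagging" actually adds to a frame.

```python
# Vendor-neutral sketch of a small VLAN plan (not FSOS syntax).
# VLAN IDs, names, and port roles below are hypothetical.
import struct
from dataclasses import dataclass, field
from typing import Optional

VLANS = {10: "servers", 20: "main"}


@dataclass
class Port:
    name: str
    untagged: Optional[int] = None                 # access/native VLAN, sent without a tag
    tagged: set[int] = field(default_factory=set)  # VLANs carried with an 802.1Q tag


PORTS = [
    Port("T360", untagged=10),
    Port("MS-01", untagged=10),
    Port("backup", untagged=10),
    Port("uplink", tagged={10, 20}),  # trunk to the FortiSwitch 124F
]


def validate(ports: list[Port], vlans: dict[int, str]) -> list[str]:
    """Return a list of problems; an empty list means the plan is consistent."""
    problems = []
    for p in ports:
        vids = sorted(p.tagged) + ([p.untagged] if p.untagged is not None else [])
        if not vids:
            problems.append(f"{p.name}: no VLAN assigned")
        for vid in vids:
            if not 1 <= vid <= 4094:  # 0 and 4095 are reserved by 802.1Q
                problems.append(f"{p.name}: VLAN {vid} outside 1-4094")
            elif vid not in vlans:
                problems.append(f"{p.name}: VLAN {vid} not defined")
        if p.untagged is not None and p.untagged in p.tagged:
            problems.append(f"{p.name}: VLAN {p.untagged} both tagged and untagged")
    return problems


def dot1q_tag(vid: int, pcp: int = 0, dei: int = 0) -> bytes:
    """Build the 4-byte 802.1Q tag: TPID 0x8100, then PCP (3 bits) | DEI (1 bit) | VID (12 bits)."""
    if not (0 <= vid <= 0xFFF and 0 <= pcp <= 7 and dei in (0, 1)):
        raise ValueError("802.1Q field out of range")
    tci = (pcp << 13) | (dei << 12) | vid
    return struct.pack("!HH", 0x8100, tci)


if __name__ == "__main__":
    print(validate(PORTS, VLANS) or "VLAN plan looks consistent")
    print(dot1q_tag(10).hex())  # 8100000a
```

On the switch itself, this would translate to access ports on VLAN 10 plus a tagged trunk on the uplink. The exact FSOS commands are worth confirming in FS’s own configuration guide rather than assuming they mirror another vendor’s CLI.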

My Final Thoughts

After laying it all out, the FS S3260-10S still feels like a really strong contender. It has the right ports, a reasonable price, and the features I need for my homelab’s next chapter.

But those lingering questions about reliability, noise, and usability are what I’m chewing on now. It’s one thing to read about a switch, and another to live with it. I’m going to do a bit more digging, but I have a feeling this might just be the switch that ties my whole 10GbE homelab upgrade together. If you’ve used one, I’d love to hear your thoughts.