A friendly dive into how batches work in training neural networks, breaking down forward and backward passes

If you’ve ever wondered how a batch neural network processes data during training, you’re not alone. Forward and backward passes are central to training neural networks, but processing data in batches adds complexity that’s worth understanding. Today I want to share a straightforward explanation of what actually happens behind the scenes when we train models in batches.

What Happens in a Batch Neural Network?

Simply put, a batch neural network processes groups of data points together instead of handling each data point one by one. Each group is called a “batch,” and the number of examples in it (the batch size) is a setting you choose, such as 32 or 128. The primary benefit? Efficiency. Batching turns many small computations into a few large ones, and on modern hardware like GPUs those operations run in parallel, which speeds things up significantly.
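Here is a minimal NumPy sketch of the grouping step, assuming the whole dataset fits in memory as arrays. The `make_batches` helper is hypothetical and not part of any library; frameworks provide their own tools for this (PyTorch, for instance, has a `DataLoader` class).

```python
import numpy as np

def make_batches(X, y, batch_size, rng=None):
    """Yield (X_batch, y_batch) pairs of at most batch_size rows each.

    If rng is given, rows are shuffled first (a common choice at the start
    of each epoch); otherwise they are taken in their original order.
    """
    n = X.shape[0]
    order = rng.permutation(n) if rng is not None else np.arange(n)
    for start in range(0, n, batch_size):
        idx = order[start:start + batch_size]
        yield X[idx], y[idx]

# Hypothetical data: 25 examples with 3 features each, grouped into batches of 10.
X = np.arange(75, dtype=float).reshape(25, 3)
y = np.arange(25)
sizes = [xb.shape[0] for xb, _ in make_batches(X, y, batch_size=10)]
print(sizes)  # [10, 10, 5]: the last batch holds the leftover examples
```

Shuffling before each pass over the data means the batches differ from one pass to the next, which is why the sketch accepts an optional random generator.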

Forward Pass in Batches: Matrix Multiplication Magic

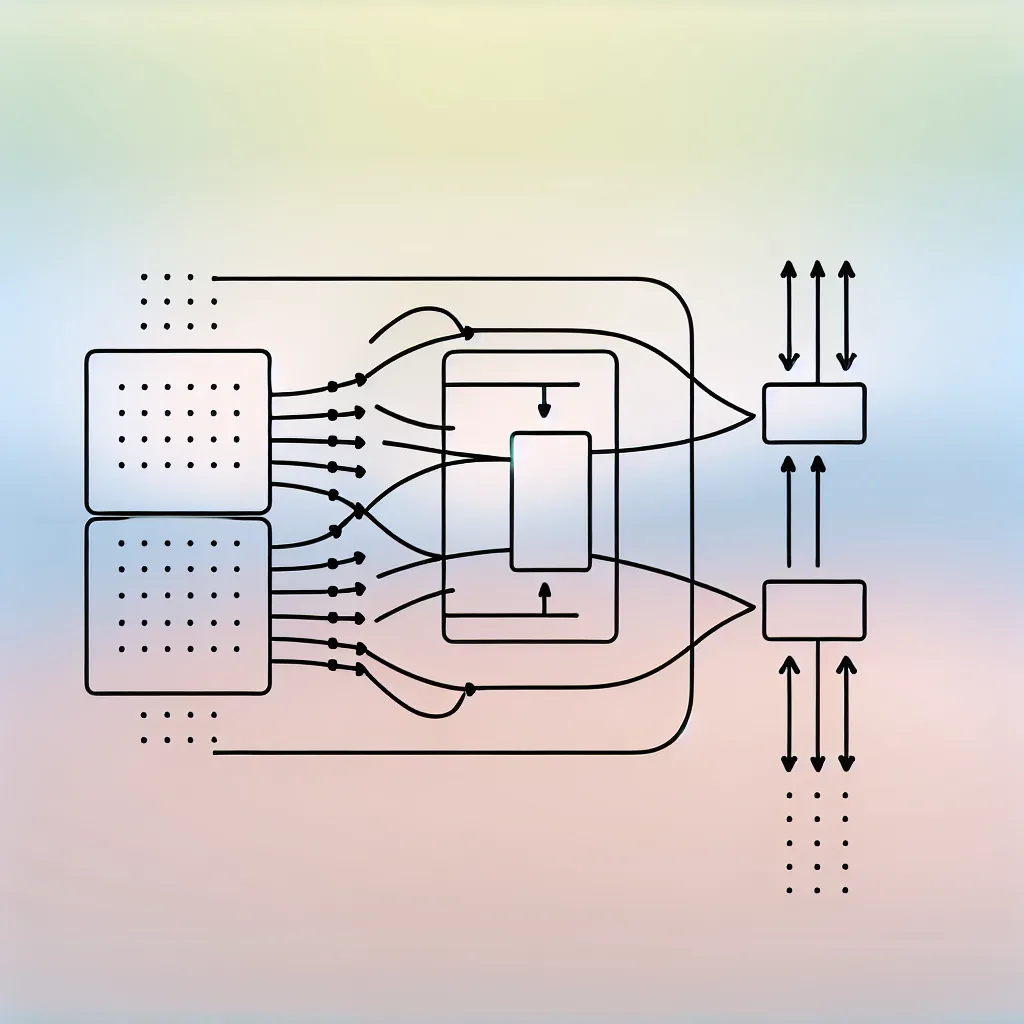

Suppose a batch holds 10 examples. Rather than being processed one at a time, they are stacked into a single matrix, one example per row, so 10 examples with d features each form a 10 × d matrix. During the forward pass, each layer multiplies this entire matrix by its weight matrix at once (then adds its bias and applies its activation function), so the network calculates the outputs for all examples in the batch simultaneously.

This single matrix multiplication replaces a loop over individual data points, which makes the forward computation fast and efficient. Instead of 10 separate vector-matrix products, the hardware performs one larger matrix multiplication, which it can parallelize far more effectively.
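To make this concrete, here is a minimal NumPy sketch, assuming a single dense layer with a ReLU activation and randomly initialized weights (the layer sizes and variable names are illustrative, not from any particular framework). It checks that one batched multiplication gives the same result as looping over the examples:

```python
import numpy as np

rng = np.random.default_rng(0)

batch_size, n_in, n_out = 10, 4, 3
X = rng.normal(size=(batch_size, n_in))   # one example per row
W = rng.normal(size=(n_in, n_out))        # weights of a single dense layer
b = rng.normal(size=n_out)                # bias, broadcast across every row

# Batched forward pass: one matrix multiplication for the whole batch.
Z_batch = X @ W + b                       # shape (10, 3)
H = np.maximum(Z_batch, 0.0)              # ReLU applied elementwise to the whole batch

# Equivalent loop: one vector-matrix product per example.
Z_loop = np.stack([x @ W + b for x in X])

print(Z_batch.shape)                      # (10, 3)
print(np.allclose(Z_batch, Z_loop))       # True
```

Both versions compute the same numbers; the batched form simply hands the hardware one large operation instead of ten small ones.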

Calculating Loss Across a Batch

Once the network outputs predictions for every example in the batch, the loss function compares each prediction with its true label, giving one loss value per example. These per-example losses are then combined into a single number, usually by averaging them. Averaging gives every example the same weight, so no single example dominates the update, and it keeps the loss on the same scale regardless of batch size. Because the average draws on many examples, it also gives the network a smoother, less noisy error signal than the loss from any single example would.
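A small sketch of batch loss averaging, assuming mean squared error as the loss (the text doesn't name a specific loss, and classification models more often use cross-entropy). The predictions and targets are made-up numbers for illustration:

```python
import numpy as np

def mse_per_example(pred, target):
    # Squared error for each example, averaged over that example's outputs.
    return np.mean((pred - target) ** 2, axis=1)

# Hypothetical batch of 3 predictions with 2 outputs each.
pred = np.array([[0.5, 1.0],
                 [2.0, 0.0],
                 [1.0, 1.0]])
target = np.array([[0.0, 1.0],
                   [2.0, 1.0],
                   [1.0, 1.0]])

per_example = mse_per_example(pred, target)  # [0.125, 0.5, 0.0]: one value per example
batch_loss = per_example.mean()              # 0.625 / 3, about 0.2083: one number for the batch
```

Each example contributes exactly one third of `batch_loss`, which is the equal weighting described above.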

Backward Pass in Batches: Updating With an Eye on All Data Points

The backward pass is where the network learns: it computes the gradient of the loss with respect to each weight, and those gradients drive the weight updates. Because the loss is a single number computed from the whole batch, its gradient is found with the chain rule from calculus, applied to whole matrices rather than to individual numbers.

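Just like the forward pass, the backward pass uses matrix operations to handle the entire batch at once. For a dense layer computing Z = XW, for example, the gradient with respect to W is the transpose of X multiplied by the gradient of the loss with respect to Z, and that one matrix product combines the contributions of every example in the batch (with an averaged loss, it is their average). As a result, each update reflects the combined information from all of the batch’s examples, which helps stabilize training.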
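Here is a hand-written backward pass for one dense layer, as a minimal NumPy sketch. It assumes the loss is the batch mean of each example’s summed squared error (a choice made for this sketch), and compares the matrix form of the gradient against a per-example loop:

```python
import numpy as np

rng = np.random.default_rng(1)
B, n_in, n_out = 8, 4, 2
X = rng.normal(size=(B, n_in))     # batch of inputs, one example per row
Y = rng.normal(size=(B, n_out))    # targets
W = rng.normal(size=(n_in, n_out))
b = np.zeros(n_out)

# Forward pass and batch loss: mean over examples of the summed squared error.
Z = X @ W + b
loss = np.mean(np.sum((Z - Y) ** 2, axis=1))

# Backward pass with matrix operations (chain rule through Z = XW + b).
dZ = 2.0 * (Z - Y) / B             # dL/dZ, shape (B, n_out); the 1/B comes from the mean
dW = X.T @ dZ                      # dL/dW, shape (n_in, n_out): combines all examples at once
db = dZ.sum(axis=0)                # dL/db, shape (n_out,)

# The same weight gradient computed one example at a time, then averaged.
dW_loop = np.mean(
    [np.outer(x, 2.0 * (x @ W + b - y)) for x, y in zip(X, Y)], axis=0
)

print(np.allclose(dW, dW_loop))    # True
```

The single product `X.T @ dZ` does the work of the whole loop, which is the same efficiency argument as in the forward pass.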

Syncing Updated Weights Across Different Batches

A common point of confusion is how updated weights carry over from one batch to the next. Each batch’s backward pass computes gradients, and an optimizer such as SGD (stochastic gradient descent) uses them to update the weights.

The weights aren’t updated after every single data point, but after each batch. So after batch 1 is processed and the weights are updated, batch 2 uses those new weights for its forward pass. This cycle repeats for every batch in the dataset; one full pass through all the batches is called an epoch, and training usually runs for many epochs.
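The loop below is a minimal sketch of mini-batch SGD on a synthetic linear-regression problem; the data, learning rate, batch size, and epoch count are all illustrative assumptions. The key line is the update inside the inner loop: each batch’s forward pass sees the weights produced by the previous batch.

```python
import numpy as np

rng = np.random.default_rng(42)

# Synthetic regression data: y = X @ true_w + small noise (illustrative only).
n, d = 200, 3
true_w = np.array([2.0, -1.0, 0.5])
X = rng.normal(size=(n, d))
y = X @ true_w + 0.01 * rng.normal(size=n)

w = np.zeros(d)                        # the one set of weights every batch uses
lr, batch_size, epochs = 0.1, 20, 30
updates = 0

for epoch in range(epochs):
    order = rng.permutation(n)         # reshuffle at the start of each epoch
    for start in range(0, n, batch_size):
        idx = order[start:start + batch_size]
        Xb, yb = X[idx], y[idx]
        pred = Xb @ w                                 # forward pass with the current weights
        grad = 2.0 * Xb.T @ (pred - yb) / len(idx)    # gradient of the batch-mean squared error
        w -= lr * grad                                # update before the next batch arrives
        updates += 1

print(updates)  # 300: 30 epochs x 10 batches per epoch
print(w)        # should end up close to true_w
```

Because the update happens inside the inner loop, there is no separate syncing step here: the next batch simply reads the array that was just modified.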

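On a single device, this sequential batch-by-batch updating is all the syncing that’s needed, because there is only one copy of the weights. In distributed training, where several machines or GPUs each process part of the data, mechanisms like parameter servers or gradient averaging across nodes (for example, with an all-reduce operation) keep every copy of the weights in sync.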
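Real distributed training relies on framework support (PyTorch’s `DistributedDataParallel`, for instance), but the core idea of gradient averaging can be simulated in a single process. In this sketch the four “workers”, their equal-sized data shards, and the `batch_grad` helper are all hypothetical, and no actual communication takes place:

```python
import numpy as np

def batch_grad(w, Xb, yb):
    # Gradient of the mean squared error of a linear model on one batch.
    return 2.0 * Xb.T @ (Xb @ w - yb) / len(yb)

rng = np.random.default_rng(7)
X = rng.normal(size=(32, 3))
y = rng.normal(size=32)
w = rng.normal(size=3)

# Simulate 4 workers, each holding an identical copy of w and a quarter of the batch.
shards = zip(np.array_split(X, 4), np.array_split(y, 4))
worker_grads = [batch_grad(w, Xs, ys) for Xs, ys in shards]

# Stand-in for an all-reduce: average the workers' gradients.
avg_grad = np.mean(worker_grads, axis=0)

# With equal shard sizes this matches the gradient of the full batch of 32.
print(np.allclose(avg_grad, batch_grad(w, X, y)))  # True

# Every worker applies the same averaged update, so the replicas stay identical.
lr = 0.1
replicas = [w.copy() for _ in range(4)]
for r in replicas:
    r -= lr * avg_grad
```

The design point is that workers exchange gradients rather than weights: as long as everyone starts from the same weights and applies the same averaged gradient, the copies never drift apart.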

Wrapping Up

Batch neural network training relies on efficient matrix operations to handle both forward and backward passes for groups of data, not just individual points. Processing batches improves speed and stability during learning.

For more details about neural network training and batch processing, you might want to check out these resources:

– CS231n: Convolutional Neural Networks for Visual Recognition (Stanford)

– Deep Learning by Ian Goodfellow, Yoshua Bengio, and Aaron Courville

– PyTorch Tutorials on Batching and Autograd

Understanding this will not only help you grasp how modern frameworks work under the hood but also prepare you for implementing your own neural network training loops—even in less traditional programming environments.

So next time you train a batch neural network, you’ll know exactly how the forward pass, loss calculation, backward pass, and weight updates come together—making those complex math operations feel a little more approachable!