Why sticking to SSH tunneling might be the security sweet spot for your home lab setup

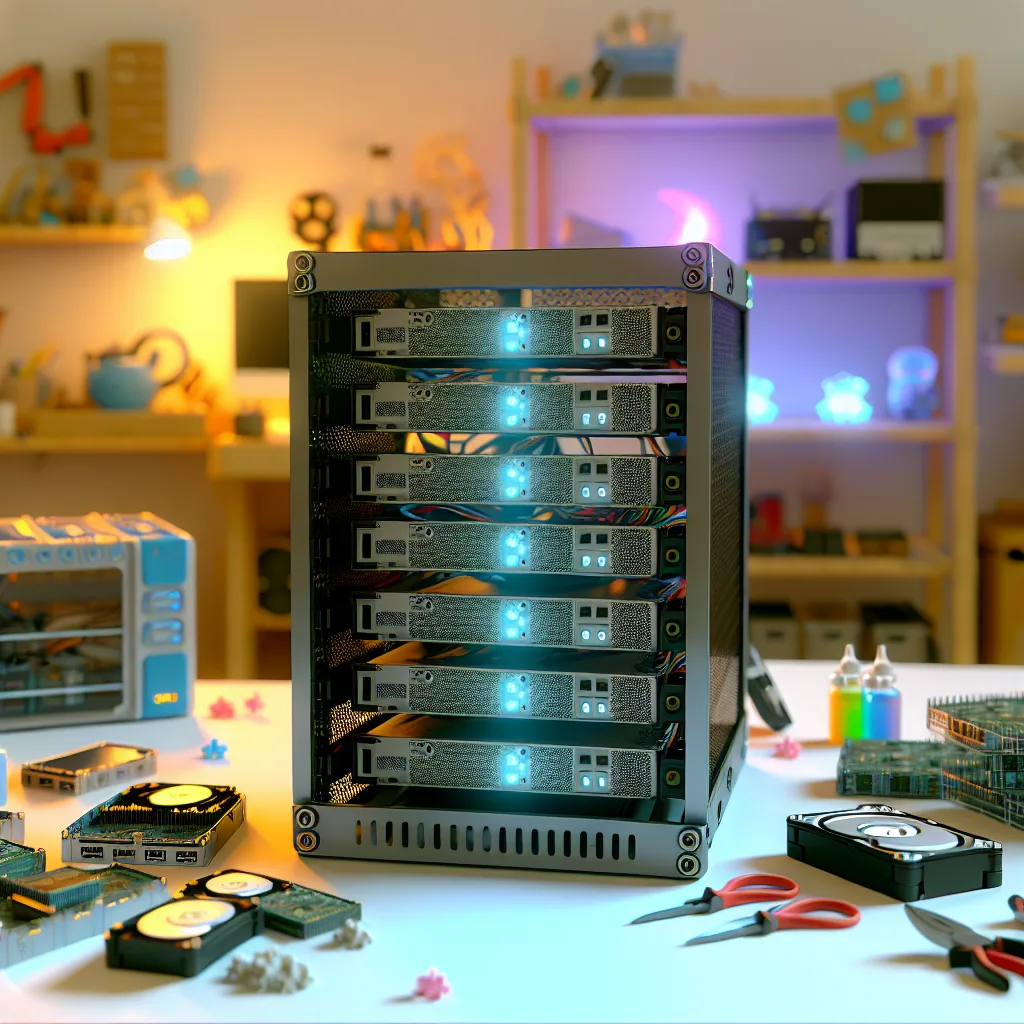

Setting up a home lab can feel a bit overwhelming, especially when it comes to security. If you’ve been wondering how to keep your home lab secure without opening too many doors to the internet, you’re in good company. Today, I want to share some thoughts on why SSH tunneling might be all you need for remote access, with no complex reverse proxies or DNS configurations required.

Why Focus on Home Lab Security?

Your home lab is your personal tech playground, but it’s also a potential target if you leave it wide open. The primary concern is preventing unauthorized access while still being able to connect remotely when you need to. A lot of people get caught up in fancy setups, but sometimes simplicity wins.

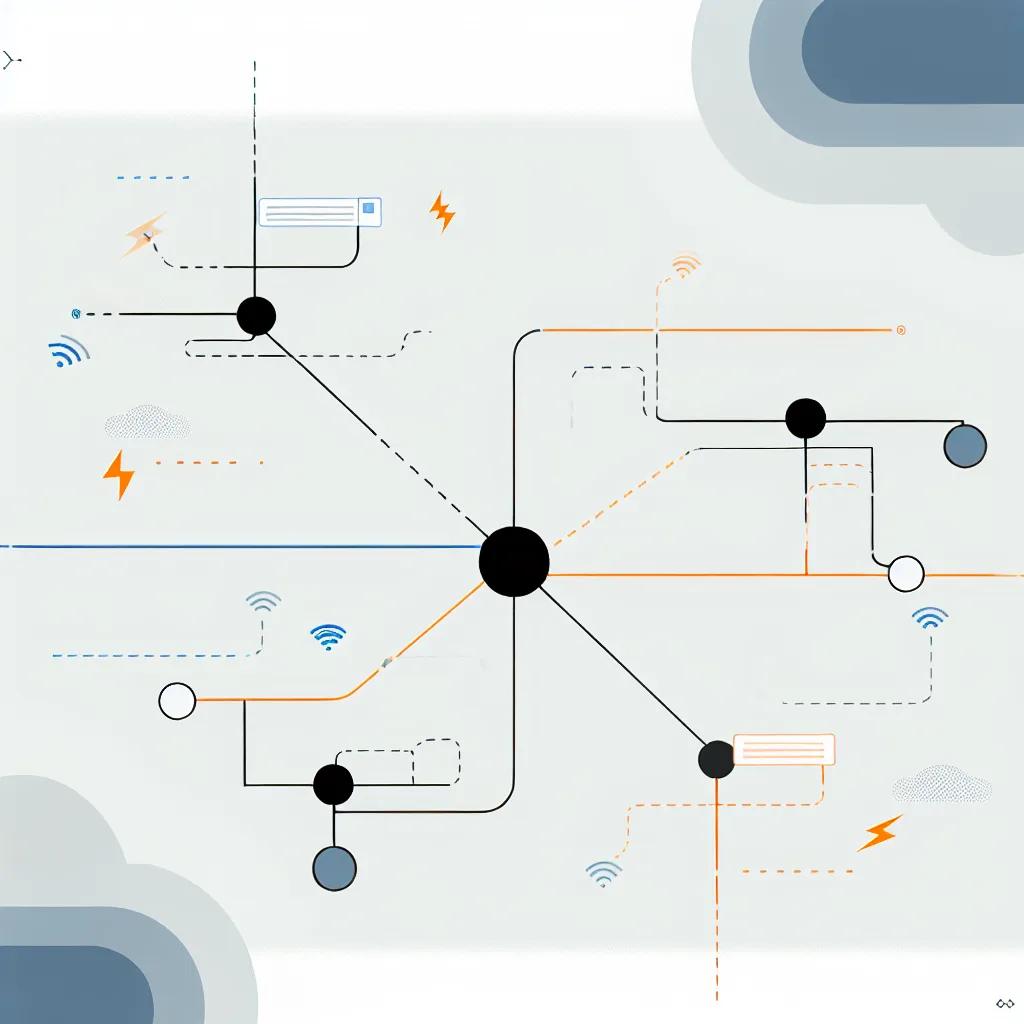

The Basics of SSH Tunneling for Home Lab Security

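One straightforward method is to limit what your public IP address exposes. Rather than opening several ports to the internet, why not open only SSH? From there, you tunnel the ports you need through that single encrypted SSH connection. For example, a web dashboard listening on port 8080 inside your lab can be reached at localhost:8080 on your laptop, even though port 8080 is never exposed to the internet.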
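To make the tunneling idea concrete: OpenSSH's `-L [bind_address:]port:host:hostport` option forwards a local port through the SSH connection to a host and port as seen from the server, and several `-L` options can share one connection. The Python sketch below only assembles that `ssh` command line. The names `Forward` and `build_tunnel_command`, the user `me`, the host `homelab.example.com`, the LAN address `192.168.1.20`, and all port numbers are hypothetical placeholders.

```python
import shlex
from typing import List, NamedTuple


class Forward(NamedTuple):
    """One local forward: 127.0.0.1:local_port -> target_host:target_port, resolved on the SSH server."""
    local_port: int
    target_host: str
    target_port: int


def build_tunnel_command(user: str, host: str, forwards: List[Forward], ssh_port: int = 22) -> List[str]:
    """Assemble an OpenSSH command that carries several port forwards over one connection."""
    # -N: run no remote command, just hold the tunnels open.
    # -p: the SSH port exposed on your public IP.
    cmd = ["ssh", "-N", "-p", str(ssh_port)]
    for fwd in forwards:
        # -L bind_address:port:host:hostport; binding to 127.0.0.1 keeps the local end private.
        cmd += ["-L", f"127.0.0.1:{fwd.local_port}:{fwd.target_host}:{fwd.target_port}"]
    cmd.append(f"{user}@{host}")
    return cmd


if __name__ == "__main__":
    # Hypothetical lab: a dashboard on the SSH server itself and a NAS web UI on another LAN host.
    forwards = [Forward(8080, "localhost", 8080), Forward(5001, "192.168.1.20", 5000)]
    print(shlex.join(build_tunnel_command("me", "homelab.example.com", forwards)))
    # ssh -N -p 22 -L 127.0.0.1:8080:localhost:8080 -L 127.0.0.1:5001:192.168.1.20:5000 me@homelab.example.com
```

Running the printed command keeps both tunnels open until you stop it with Ctrl+C; the dashboard is then at `http://localhost:8080` and the NAS UI at `http://localhost:5001`. Binding the local end to `127.0.0.1` means only your own machine can use the forwarded ports.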

This approach has some perks:

- Strong passwords and brute-force protection: A strong password combined with a tool or script that blocks an IP address after a few failed attempts (for example, just two tries followed by a 30-minute block) is a solid first line of defense.

- Fewer open ports: Reducing the number of visible open ports decreases the attack surface, which means fewer opportunities for attackers to find a vulnerability.

- No need for complicated reverse proxies or DNS configurations: This means less setup time and fewer components that could be misconfigured.
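The blocking rule from the first bullet (two failed attempts, then a 30-minute block) can be modeled in a few lines. This is a hypothetical, in-memory Python sketch of the policy only: it does not touch any firewall, and unlike Fail2Ban it does not restrict counting to a time window (Fail2Ban's `findtime`). Its two parameters play roughly the role of Fail2Ban's `maxretry` and `bantime`; in practice a tool like Fail2Ban or your firewall enforces the decision.

```python
import time
from typing import Dict, Optional


class BruteForceBlocker:
    """Hypothetical in-memory policy: block an IP for block_seconds after max_failures failed logins."""

    def __init__(self, max_failures: int = 2, block_seconds: float = 30 * 60) -> None:
        self.max_failures = max_failures
        self.block_seconds = block_seconds
        self._failures: Dict[str, int] = {}
        self._blocked_until: Dict[str, float] = {}

    def is_blocked(self, ip: str, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        until = self._blocked_until.get(ip)
        if until is None:
            return False
        if now >= until:
            # Block has expired: lift it and reset the failure count.
            del self._blocked_until[ip]
            self._failures.pop(ip, None)
            return False
        return True

    def record_failure(self, ip: str, now: Optional[float] = None) -> bool:
        """Count a failed login from ip; return True if ip is blocked afterwards."""
        now = time.time() if now is None else now
        if self.is_blocked(ip, now):
            return True
        self._failures[ip] = self._failures.get(ip, 0) + 1
        if self._failures[ip] >= self.max_failures:
            self._blocked_until[ip] = now + self.block_seconds
            return True
        return False

    def record_success(self, ip: str) -> None:
        """A successful login clears the failure count for that address."""
        self._failures.pop(ip, None)


if __name__ == "__main__":
    blocker = BruteForceBlocker()
    print(blocker.record_failure("203.0.113.5", now=0))     # False: first strike
    print(blocker.record_failure("203.0.113.5", now=5))     # True: second strike, blocked
    print(blocker.is_blocked("203.0.113.5", now=5 + 1799))  # True: still inside 30 minutes
    print(blocker.is_blocked("203.0.113.5", now=5 + 1800))  # False: block expired
```

Passing `now` explicitly makes the timing easy to test; in a real watcher you would omit it and let the class read the clock.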

Configured properly, SSH is a very secure way to access your home lab remotely without the hassle of running additional services. For those wanting to dive deeper, the official OpenSSH documentation (in particular the sshd_config manual page) is a great place to start.
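One way to check the authentication side of your configuration is to audit a couple of `sshd_config` directives. The Python sketch below is a deliberately simplified parser: it ignores `Include` and stops at the first `Match` block, and the function names are hypothetical. When a directive is absent it assumes OpenSSH's defaults (`PasswordAuthentication yes`, `PubkeyAuthentication yes`). On a live server, `sudo sshd -T` prints the effective configuration and is more reliable than parsing the file yourself.

```python
from typing import Dict, List


def parse_sshd_config(text: str) -> Dict[str, str]:
    """Return lower-cased keyword -> value for the global part of an sshd_config file.

    Simplified on purpose: sshd keywords are case-insensitive and the first value
    obtained for a keyword is the one used, but this sketch stops at the first
    Match block and does not follow Include directives.
    """
    settings: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and comments
        parts = line.split(None, 1)
        keyword = parts[0].lower()
        if keyword == "match":
            break  # per-user/per-host overrides are out of scope here
        value = parts[1].strip() if len(parts) > 1 else ""
        settings.setdefault(keyword, value)  # first value wins
    return settings


def audit_auth_settings(settings: Dict[str, str]) -> List[str]:
    """Flag authentication settings worth a second look; absent keys fall back to OpenSSH defaults."""
    warnings: List[str] = []
    if settings.get("passwordauthentication", "yes").lower() == "yes":
        warnings.append("PasswordAuthentication is on: use strong passwords or switch to key-only logins")
    if settings.get("pubkeyauthentication", "yes").lower() == "no":
        warnings.append("PubkeyAuthentication is off: SSH key logins are disabled")
    return warnings


if __name__ == "__main__":
    sample = """
    # Hypothetical excerpt from /etc/ssh/sshd_config
    PubkeyAuthentication yes
    PasswordAuthentication no
    """
    print(audit_auth_settings(parse_sshd_config(sample)))  # []
```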

Are There Downsides to This Setup?

Sure, it’s not perfect. Here are a few things to watch out for:

- SSH brute-force attacks are common: Any SSH port exposed to the internet will attract automated login attempts, so your brute-force blocking setup is vital. Make sure your blocking tool or firewall rules are reliable, and test them occasionally.

- Strong authentication methods help: Beyond strong passwords, consider using SSH key pairs for authentication, which are much more secure and convenient.

- Monitoring is key: Keep an eye on your logs for suspicious activity, such as repeated failed logins from the same IP address. Tools like Fail2Ban can automate this by watching log files and banning offending addresses.
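As a rough illustration of what that log monitoring looks for, the Python sketch below counts failed SSH logins per source IP. It assumes OpenSSH's usual `Failed password for ... from <ip> port <n>` message format, as found in `/var/log/auth.log` on Debian-based systems or in `journalctl` output; the sample lines, function names, and threshold of two are hypothetical. Fail2Ban applies the same idea through its own regex filters, so treat this as a way to understand the mechanism rather than a replacement.

```python
import re
from collections import Counter
from typing import Dict, Iterable

# Assumed OpenSSH message format, e.g.:
#   Jan 12 10:15:03 homelab sshd[1234]: Failed password for invalid user admin from 203.0.113.5 port 52346 ssh2
FAILED_LOGIN = re.compile(
    r"Failed \S+ for (?:invalid user )?(?P<user>\S+) from (?P<ip>\S+) port \d+"
)


def failed_logins_by_ip(lines: Iterable[str]) -> Counter:
    """Count failed SSH authentication attempts per source IP."""
    counts: Counter = Counter()
    for line in lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match.group("ip")] += 1
    return counts


def offenders(lines: Iterable[str], threshold: int = 2) -> Dict[str, int]:
    """Source IPs with at least `threshold` failures (the example in the text blocks after two)."""
    return {ip: n for ip, n in failed_logins_by_ip(lines).items() if n >= threshold}


if __name__ == "__main__":
    # Hypothetical sample lines in the assumed format.
    sample = [
        "Jan 12 10:15:01 homelab sshd[1234]: Failed password for root from 203.0.113.5 port 52344 ssh2",
        "Jan 12 10:15:03 homelab sshd[1234]: Failed password for invalid user admin from 203.0.113.5 port 52346 ssh2",
        "Jan 12 10:16:00 homelab sshd[1240]: Accepted publickey for me from 192.168.1.10 port 50000 ssh2",
        "Jan 12 10:17:00 homelab sshd[1250]: Failed password for me from 198.51.100.7 port 40000 ssh2",
    ]
    print(offenders(sample))  # {'203.0.113.5': 2}
```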

When Should You Consider More Complex Solutions?

If you start running multiple services that need public access, or you want web interfaces reachable via friendly URLs, then a reverse proxy and DNS setup might be worth the effort. A reverse proxy such as Nginx or Traefik can route each hostname to the right service and handle TLS in one place.

But if your main goal is remote access for a small number of services, tunneling through SSH remains a clean and effective method.

My Takeaway on Home Lab Security

I often find that many newcomers to home labs stress about security and overcomplicate things, which can slow down learning and experimentation. Starting with a secure SSH tunnel approach balances accessibility with solid protection.

Just remember to:

- Use strong, unique passwords or SSH key authentication.

- Employ brute-force protections to lock out repeated offenders.

- Regularly review your server logs and tweak your firewall rules.

If you cover those basics, your home lab security is in a good place without needing to dive into more complex network setups right away.

If you want to learn more about securing your home lab efficiently, the Home Lab Security Guide is a helpful resource. And for a deep dive into SSH and tunneling, check out the Linuxize SSH Tunneling tutorial.

Final Thought

In the end, it’s about what makes you feel confident and works for your setup. Keep it simple, stay safe, and enjoy tinkering with your home lab!