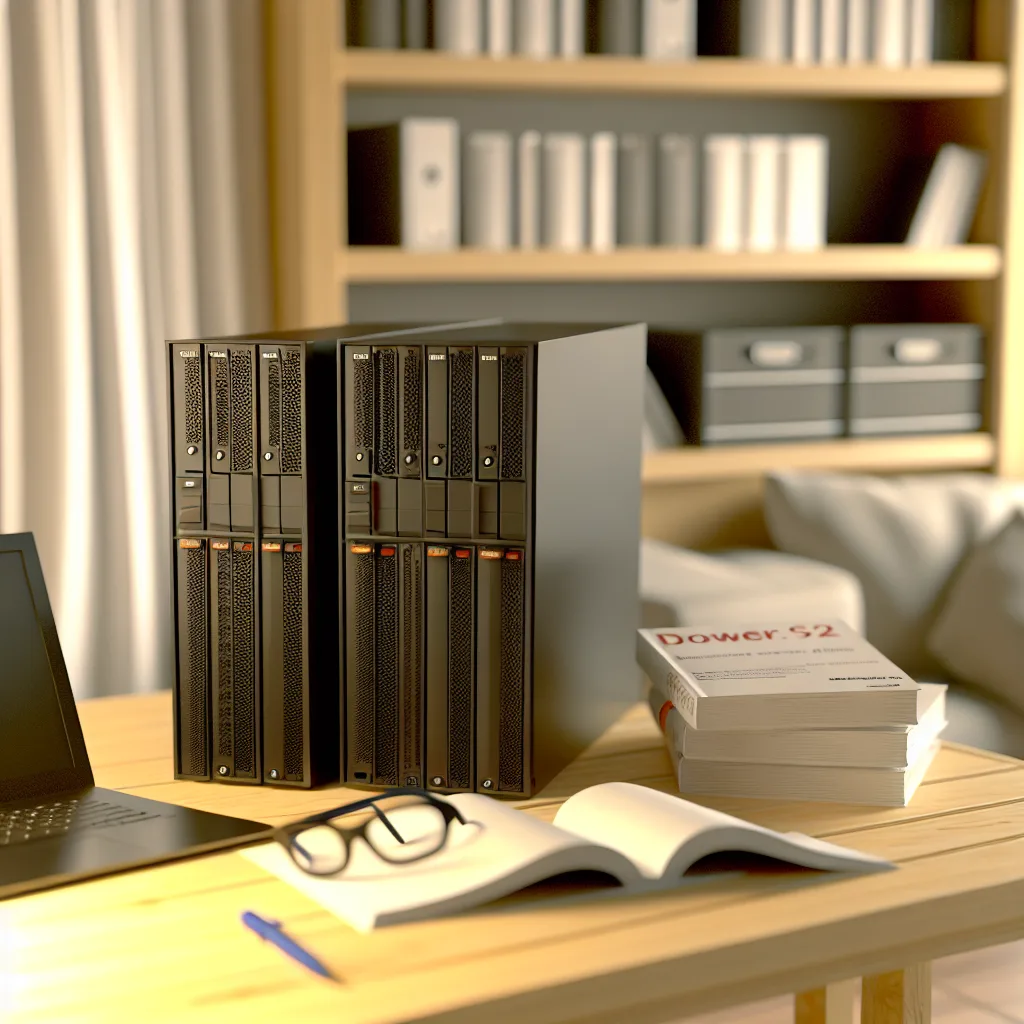

How I Scored Four Free Dell PowerEdge Servers and Why It Matters for Tech Enthusiasts

If you’re into home labs, run your own server, or just love geeky tech deals, finding free equipment can feel like hitting the jackpot. Recently, I came across an amazing score: four free Dell PowerEdge servers, a mix of R610s and R710s. For anyone who wants to explore server hardware without breaking the bank, a find like that is a treasure chest.

What Are PowerEdge Servers?

Dell’s PowerEdge line is a popular choice for businesses and tech enthusiasts alike. Older models like the R610 and R710 are known for their reliability and for decent performance at a low price on the secondhand market. Whether you want to set up a home server, experiment with virtualization, or build a small business infrastructure, PowerEdge servers offer a great mix of power and flexibility.

Why Free PowerEdge Servers Are Such a Big Deal

Servers can be expensive, especially if you’re starting out or testing new projects. So, when you find free PowerEdge servers, it’s an incredible opportunity: you save money while still getting access to fairly capable hardware. For instance, the Dell PowerEdge R610 is a 1U rack server with two processor sockets and good RAM capacity. The R710 is a 2U server built on the same dual-processor design, with more memory slots and drive bays, so it offers more room to expand.

How to Benefit from Free PowerEdge Servers

If you get your hands on free PowerEdge servers, you can:

- Build a cost-effective home lab for learning and experimenting.

- Set up a personal cloud or file server.

- Try out different operating systems and server roles, such as a virtualization host running VMware or Proxmox.

These servers support many popular server OS options—Microsoft Windows Server, various Linux distros like Ubuntu Server or CentOS, and more. Dell’s official site provides excellent documentation on these models, helping you get started without a hassle (Dell PowerEdge Documentation).

Tips for Finding Free or Affordable Servers

- Keep an eye on local marketplaces or online classifieds. People often give away old business equipment when upgrading.

- Join tech swap groups or forums. Enthusiasts share tips or sometimes servers for free or low cost.

- Check out data center decommission sales. When companies retire older servers, you might grab a deal or even freebies.

Sites like eBay, Craigslist, or Facebook Marketplace sometimes list PowerEdge servers at very budget-friendly prices if freebies aren’t available. Understanding server specs makes it much easier to spot the good deals (eBay PowerEdge Listings).
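If you like to compare listings methodically, here is a rough Python sketch of one way to do it: drop anything below a minimum spec, then sort what remains by price per gigabyte of RAM. The `Listing` fields, the thresholds, and the sample listings are all made up for illustration. They are not real prices or real ads, and you may care about other specs entirely.

```python
from dataclasses import dataclass


@dataclass
class Listing:
    """One secondhand server listing. All fields are hypothetical."""
    title: str
    price_usd: float         # asking price; 0 for a giveaway
    cpu_sockets_filled: int  # how many CPUs are actually installed
    ram_gb: int              # installed memory


def meets_minimum(listing, min_ram_gb=16, min_cpus=1):
    """True if the listing has at least the RAM and CPUs we want."""
    return (listing.ram_gb >= min_ram_gb
            and listing.cpu_sockets_filled >= min_cpus)


def price_per_gb(listing):
    """Asking price divided by installed RAM; infinite if no RAM is included."""
    if listing.ram_gb == 0:
        return float("inf")
    return listing.price_usd / listing.ram_gb


def rank_listings(listings, min_ram_gb=16, min_cpus=1):
    """Keep listings that meet the minimum spec, cheapest per GB first."""
    usable = [l for l in listings if meets_minimum(l, min_ram_gb, min_cpus)]
    return sorted(usable, key=price_per_gb)


# Made-up sample data, purely to show the ranking in action.
sample = [
    Listing("R610, 2 CPUs, 48 GB", 120.0, 2, 48),
    Listing("R710, 1 CPU, 8 GB", 40.0, 1, 8),
    Listing("R710, 2 CPUs, 64 GB (free pickup)", 0.0, 2, 64),
]

for item in rank_listings(sample):
    print(f"{item.title}: ${price_per_gb(item):.2f} per GB")
```

Price per gigabyte is only one possible yardstick. Drive bays, included disks, or rails may matter more for your setup, and you can swap in a different sort key to reflect that.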

The Bottom Line

Free PowerEdge servers are a sweet find for anyone who is curious about servers but doesn’t want to spend a ton of money. They can serve as both a learning platform and reliable hardware for running personal projects. It’s all about looking in the right places and understanding what you’re getting.

If you’re thinking about diving into server setups, keep an eye on local giveaways and online marketplaces. You might find your own set of servers to tinker with, just like I did!

External Resources:

- Dell PowerEdge Official Site

- VMware Official Website

- Proxmox VE Documentation