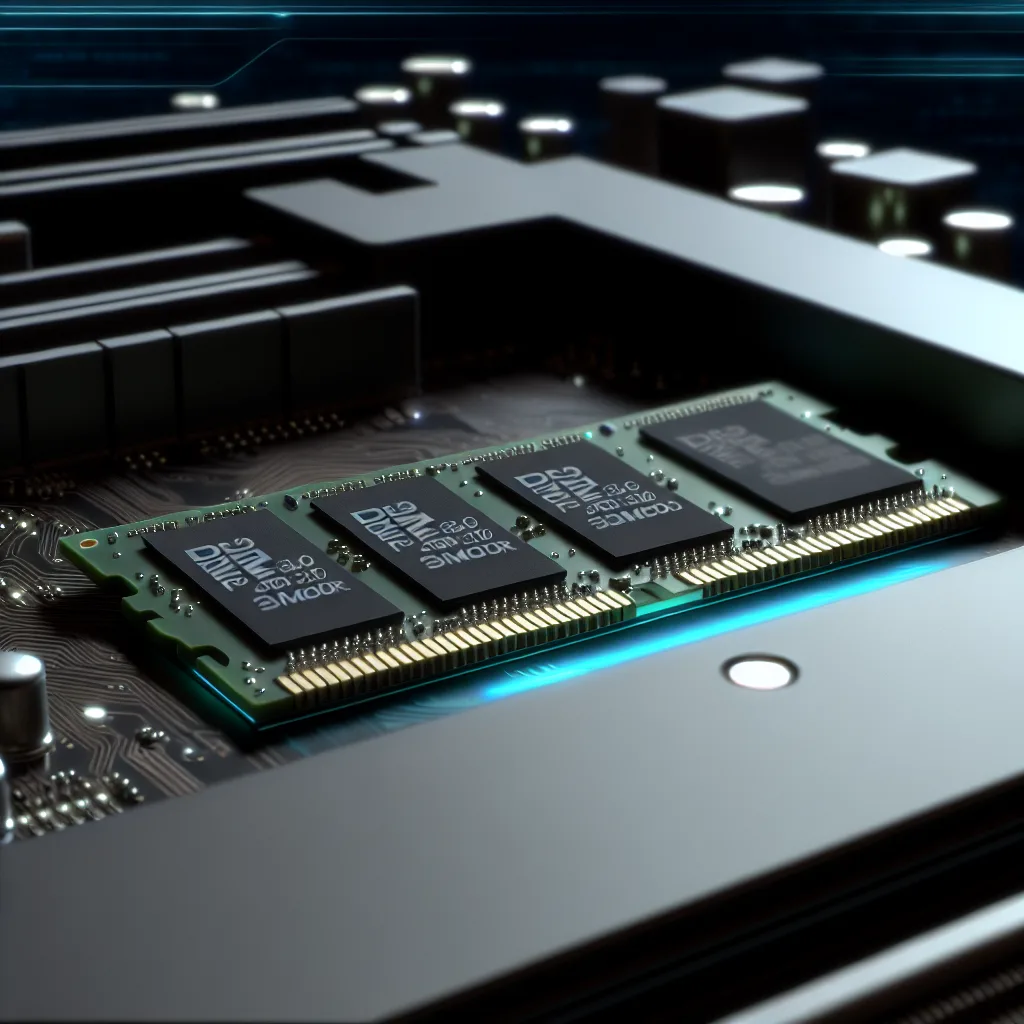

A close look at the unique DDR5 PMEM 300 memory module and the only motherboard that supports it

You might have heard about some cutting-edge computer components, but have you ever come across the DDR5 PMEM 300? This isn’t something you’ll find in just any PC. It is a rare memory module, so rare that only one motherboard currently supports it. It’s like a hidden gem in the world of computer hardware: fascinating, exclusive, and a bit mysterious.

What Is DDR5 PMEM 300?

DDR5 PMEM 300 is a persistent memory (PMEM) module that aims to pair DDR5-class speed with data retention. Traditional RAM loses its data when the power goes off; a PMEM module keeps it, which is a bit like having quick memory and storage in one device.

Why does this matter? For certain applications, especially those involving large databases or real-time analytics, fast memory that survives a restart can make a significant difference, because the working data does not have to be reloaded from slower storage after a power cycle. DDR5 PMEM 300 aims to deliver that balance of speed and persistence.
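To make the "memory and storage in one" idea a little more concrete, here is a minimal Python sketch of the general programming style that persistent memory encourages: data is changed with ordinary in-memory stores through a mapping, and it is still there after the mapping is closed and reopened. This is only an analogy under stated assumptions. A regular temporary file stands in for a persistent memory region, and the names `REGION_SIZE`, `write_persistent`, and `read_persistent` are made up for illustration. It is not the actual programming interface of DDR5 PMEM 300.

```python
import mmap
import os
import tempfile

# Arbitrary region size chosen for illustration (one typical page).
REGION_SIZE = 4096


def write_persistent(path: str, payload: bytes) -> None:
    """Store payload with byte-addressable writes through a memory mapping.

    Assumption: `path` is a regular file standing in for a persistent
    memory region; no real PMEM hardware is involved here.
    """
    with open(path, "w+b") as f:
        f.truncate(REGION_SIZE)  # size the backing region
        with mmap.mmap(f.fileno(), REGION_SIZE) as region:
            region[0:len(payload)] = payload  # plain memory store, no write() call
            region.flush()  # ask the OS to push the change to the backing medium


def read_persistent(path: str, length: int) -> bytes:
    """Reopen the region later and read the bytes back."""
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), REGION_SIZE, access=mmap.ACCESS_READ) as region:
            return bytes(region[0:length])


if __name__ == "__main__":
    # Hypothetical location for the stand-in region.
    path = os.path.join(tempfile.mkdtemp(), "pmem_region.bin")
    write_persistent(path, b"state survives")
    print(read_persistent(path, len(b"state survives")))
```

The point of the sketch is the access pattern rather than the performance: the program updates bytes in place as if they were RAM, and a later reader finds them intact. On real persistent memory the backing medium would be the module itself rather than a file on disk.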

The Unique Motherboard That Supports DDR5 PMEM 300

Here’s the kicker: the DDR5 PMEM 300 is so new and specialized that only one motherboard on the market supports it. If you’re thinking about experimenting with this technology, your hardware choice comes down to that single board, which is specifically designed to handle the power demands and performance characteristics of DDR5 PMEM 300.

Given its rarity, it’s something of a collector’s item or, more likely, a tool for niche users such as research labs, operators of high-end servers, and enthusiasts who like tinkering on the frontier of performance hardware.

Why Should You Care?

You might be wondering why this matters if it’s so niche. Well, tech enthusiasts and professionals keeping an eye on the future of computing benefit from understanding innovations like this, because they hint at where memory tech is heading.

Persistent memory like DDR5 PMEM 300 could become more commonplace as data workloads grow and systems need to be faster and more reliable. Because data held in persistent memory survives a power loss, the combination of speed and persistence could mean quicker recovery after a restart and a lower risk of losing in-flight data.

Where to Learn More?

If you want to dive deeper into DDR5 technology and the rise of persistent memory, the official DDR5 specification published by JEDEC is a great start. For the latest on motherboards and where to find hardware supporting DDR5 PMEM, the motherboard manufacturer’s website is a solid resource.

Also, communities like those on Tom’s Hardware offer discussions and real-world reviews that might give you some hands-on insight.

Final Thoughts

While DDR5 PMEM 300 isn’t something you’ll find in your everyday PC build, it represents an interesting step forward in memory technology. Its combination of speed and persistence, backed by a carefully engineered motherboard, shows where the future might be headed. Whether you’re a tech professional or just curious, it’s worth keeping an eye on developments like this, since they offer a glimpse of tomorrow’s computing landscape.

So, next time you’re thinking about memory upgrades, keep in mind that memory technology is evolving in ways that blur the line between RAM and storage, and DDR5 PMEM 300 is a good example.