My journey into the world of homelabs started with a tiny Orange Pi. Here’s how I built a small personal server and what I learned along the way.

I have a confession to make. I’ve officially fallen down the homelab rabbit hole, and I’m not sure I ever want to get out.

It all started with a simple idea: to build a tiny, low-power server for a few personal projects. Nothing fancy, just a little box that could handle some basic tasks without sending my electricity bill through the roof.

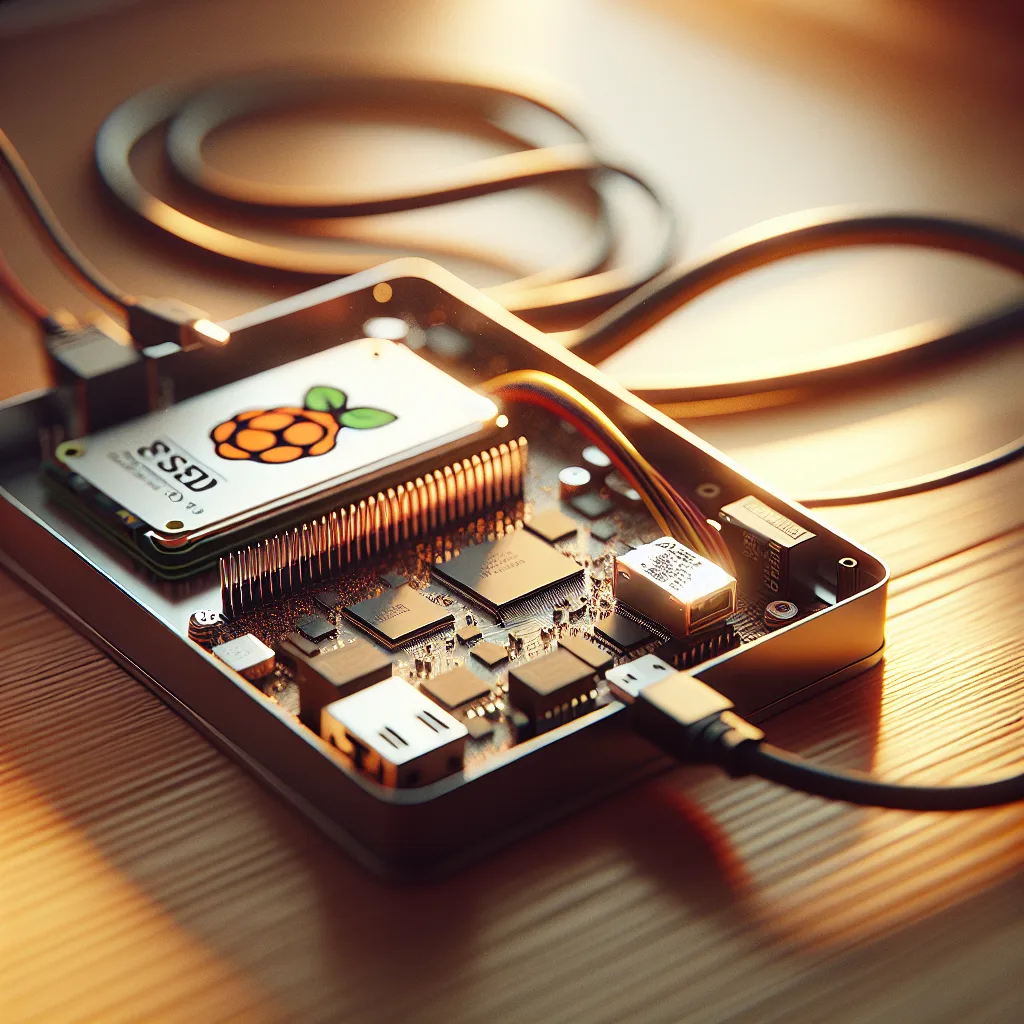

After lurking on some online forums, I decided to start small. Really small. I picked up an Orange Pi Zero 3, a single-board computer (SBC) that’s not much bigger than a credit card. It seemed perfect. Inexpensive, tiny, and just enough power for what I had in mind.

Or so I thought.

The Best-Laid Plans

My initial plan was straightforward. I wanted to run a few services:

- Navidrome: To stream my personal music collection, like my own private Spotify.

- Homebox: To keep my home inventory and digital assets organized.

- A torrent client: For… well, for downloading Linux ISOs, of course.

I got the Orange Pi, installed Armbian (a lightweight Linux distribution for ARM single-board computers like this one), and felt pretty good about myself. This was easy!

But then reality hit. The cheap, generic SD card I was using for the operating system wasn’t cutting it: it was slow, and I worried about its long-term reliability. So my first “small” upgrade was a high-endurance SanDisk SD card designed for constant read/write operations.

Next came the storage. The little SBC has no built-in storage beyond the SD card that holds the operating system, so I needed a place to keep my music and files. I ended up with an Intel DC S3610 SSD, a 400GB datacenter-grade drive. Yes, you read that right: the SSD cost more than the computer it was plugged into. It felt a little absurd, but I wanted something solid and reliable.

My tiny, budget-friendly project was already getting less tiny and less budget-friendly. And I was loving every minute of it.

My Under-Desk Datacenter

So here it is, my little under-desk lab. It’s a humble setup, but it’s mine.

- The Brains: Orange Pi Zero 3 (1GB RAM model)

- The Boot Drive: SanDisk High Endurance 64GB SD Card

- The Storage: Intel DC S3610 400GB SSD

It’s a strange little beast, a mix of budget-friendly computing and enterprise-grade hardware, but it works. Most of the time.

Which brings me to my next lesson: heat.

These tiny computers sip power, but they can still get hot, especially when they’re working hard. My little Orange Pi, with its tiny heatsink, was not prepared for the 40°C (104°F) ambient temperatures of a summer afternoon. It would crash seemingly at random, but usually right around noon.

The solution? It’s not elegant, but it works. I have the whole setup plugged into a smart plug. When it freezes, I just cut the power from my phone, wait ten minutes for it to cool down, and turn it back on. Problem solved. For now. A bigger heatsink or a small fan is probably next on the shopping list.
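If you want to confirm that heat really is the culprit before buying a fan, one option is to log the board’s temperature and compare the log with the crash times. Below is a minimal Python sketch of that idea, not part of my actual setup. It assumes Armbian exposes the SoC temperature through the standard Linux thermal sysfs interface at `/sys/class/thermal/thermal_zone0/temp`, in millidegrees Celsius; the zone number can differ between boards and images. The log path and the 80°C warning threshold are hypothetical values chosen for illustration, not documented limits for the Orange Pi Zero 3.

```python
import os
from datetime import datetime
from pathlib import Path

# Assumption: the SoC temperature is exposed via the standard Linux thermal
# sysfs interface, in millidegrees Celsius. Check /sys/class/thermal/ for the
# right zone on your board.
THERMAL_ZONE = Path("/sys/class/thermal/thermal_zone0/temp")

# Hypothetical log location and warning threshold, for illustration only.
LOG_FILE = Path.home() / "soc-temp.log"
WARN_AT_C = 80.0


def millideg_to_celsius(raw: str) -> float:
    """Convert a sysfs reading such as '52375' to degrees Celsius."""
    return int(raw.strip()) / 1000.0


def format_sample(when: datetime, temp_c: float, warn_at: float = WARN_AT_C) -> str:
    """Build one log line, flagging readings at or above the threshold."""
    flag = " HOT" if temp_c >= warn_at else ""
    return f"{when.isoformat(timespec='seconds')} {temp_c:.1f}C{flag}"


def log_once(zone: Path = THERMAL_ZONE, log_file: Path = LOG_FILE) -> str:
    """Read the current temperature and append a timestamped line to the log."""
    temp_c = millideg_to_celsius(zone.read_text())
    line = format_sample(datetime.now(), temp_c)
    with log_file.open("a") as f:
        f.write(line + "\n")
        f.flush()             # push Python's buffer to the operating system
        os.fsync(f.fileno())  # ask the OS to commit the line to the card now
    return line
```

Scheduling `log_once()` every minute with cron or a systemd timer would leave a trail of readings, so after the next freeze the last lines of the log show how hot the board was just before it went down. The `os.fsync` call is there to make it more likely that the final reading actually reaches the SD card before a crash, at the cost of one small write per sample.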

What I’ve Learned

Starting this project has been a fantastic learning experience. It’s one thing to read about self-hosting and another thing entirely to build, troubleshoot, and maintain your own server, no matter how small.

If I could do it all over again, the only thing I’d change is getting the 2GB RAM version of the Orange Pi. That extra gigabyte would provide a little more breathing room for running multiple services.

But regrets? I have none. I have my own private cloud for music and files, running silently under my desk on just a few watts of power. It’s a testament to how accessible this hobby has become.

If you’ve ever been curious about setting up your own server, don’t be intimidated. Start small. You don’t need a rack of enterprise gear. All it takes is a tiny board, a bit of patience, and a willingness to fall down a very deep, very rewarding rabbit hole. You might be surprised at what you can build.