Exploring the challenges and realities of inference costs in the evolving AI landscape

Let’s talk about cheap AI inference. It sounds like a great deal, right? Imagine the cost of running artificial intelligence models dropping so low it barely matters. For startups building new apps or interfaces on top of large language models (LLMs), this idea is tempting. Many of them resell access to third-party models at a markup, betting that the underlying costs will keep dropping. But is that a reliable plan?

In my experience watching the AI space, cheap AI inference might not be as straightforward as it seems. Sure, for small tasks like quick queries or short document summaries, the cost per task can drop over time. But users quickly want more. They start expecting AI to do much heavier lifting: writing theses, making podcasts, designing business strategies, or even creating movies and video games. Advanced AI agents will take on longer tasks that could involve processing millions or even billions of tokens in a single run, so a lower price per token does not automatically mean a lower bill per task.
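To make that concrete, here is a minimal back-of-the-envelope sketch. The prices and token counts are invented for illustration and are not taken from any provider's price list; the only point is that cost per task is tokens times price, so a 10x cheaper token can still sit inside a far more expensive task.

```python
def task_cost(tokens: int, price_per_million_tokens: float) -> float:
    """Dollar cost of one task that processes `tokens` tokens."""
    return tokens / 1_000_000 * price_per_million_tokens


# Hypothetical numbers, chosen only to illustrate the argument.
# Today: a short summary at a higher per-token price.
summary_cost = task_cost(tokens=5_000, price_per_million_tokens=10.0)

# Later: tokens are 10x cheaper, but a long agent run uses 10,000x more of them.
agent_cost = task_cost(tokens=50_000_000, price_per_million_tokens=1.0)

print(f"short summary: ${summary_cost:.2f} per task")
print(f"long agent run: ${agent_cost:.2f} per task")
print(f"cost ratio: {agent_cost / summary_cost:.0f}x")
```

Under these assumed numbers the per-token price falls by a factor of ten while the per-task cost rises by a factor of a thousand.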

So, will inference costs actually get cheap enough to be a non-issue? There are two main possibilities:

- The cost of AI inference becomes so low it’s practically negligible.

- AI apps will always need a meaningful amount of compute to stay sharp and competitive, and that compute carries ongoing costs.

History reminds us to be cautious here. In 1954, Lewis Strauss, then chairman of the U.S. Atomic Energy Commission, predicted that electricity would become “too cheap to meter.” That didn’t happen, and it is a reminder that tech dreams don’t always meet reality. If cheap inference were right around the corner, providers’ servers wouldn’t be stretched so thin every time a new AI model launches.

A concept called the Jevons paradox might explain this. When something becomes cheaper to use, people use it more, and often so much more that total resource use actually rises. Even if the cost of each inference drops, soaring demand and increasingly complex applications like autonomous cars or industrial simulations will keep data centers buzzing and chips running hard.
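Here is a rough way to see the effect with numbers. The sketch below assumes a simple constant-elasticity demand curve, which is a textbook simplification and not a measured property of the AI market. The baseline price, baseline volume, and elasticity values are all hypothetical, and total spend stands in as a proxy for total compute use.

```python
def demand(price: float, base_price: float, base_quantity: float,
           elasticity: float) -> float:
    """Quantity demanded under an assumed constant-elasticity curve."""
    return base_quantity * (price / base_price) ** (-elasticity)


def total_spend(price: float, base_price: float, base_quantity: float,
                elasticity: float) -> float:
    """Price times quantity: a proxy for total compute consumed."""
    return price * demand(price, base_price, base_quantity, elasticity)


# Hypothetical baseline: $10 per million tokens, 1,000 million tokens per month.
BASE_PRICE = 10.0
BASE_QUANTITY = 1_000.0
NEW_PRICE = 1.0  # assumed 10x price drop

baseline = total_spend(BASE_PRICE, BASE_PRICE, BASE_QUANTITY, elasticity=1.0)
for elasticity in (0.5, 1.0, 1.5):
    spend = total_spend(NEW_PRICE, BASE_PRICE, BASE_QUANTITY, elasticity)
    trend = "rises" if spend > baseline * 1.0001 else (
        "falls" if spend < baseline * 0.9999 else "stays flat")
    print(f"elasticity {elasticity}: total spend {trend} "
          f"({baseline:,.0f} -> {spend:,.0f})")
```

In this toy model the Jevons effect shows up only when elasticity is above 1: demand then grows faster than price falls, so total spend goes up. Whether inference demand is really that elastic is an empirical question the sketch cannot answer.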

This doesn’t mean cheaper inference isn’t helpful. If your startup is running AI on expensive cloud services or overpaying for GPUs, you could get edged out by competitors who find ways to cut costs. That’s part of why companies like Google have invested heavily in building their own custom AI processors, such as Google’s TPUs, rather than relying on costly third-party compute resources.

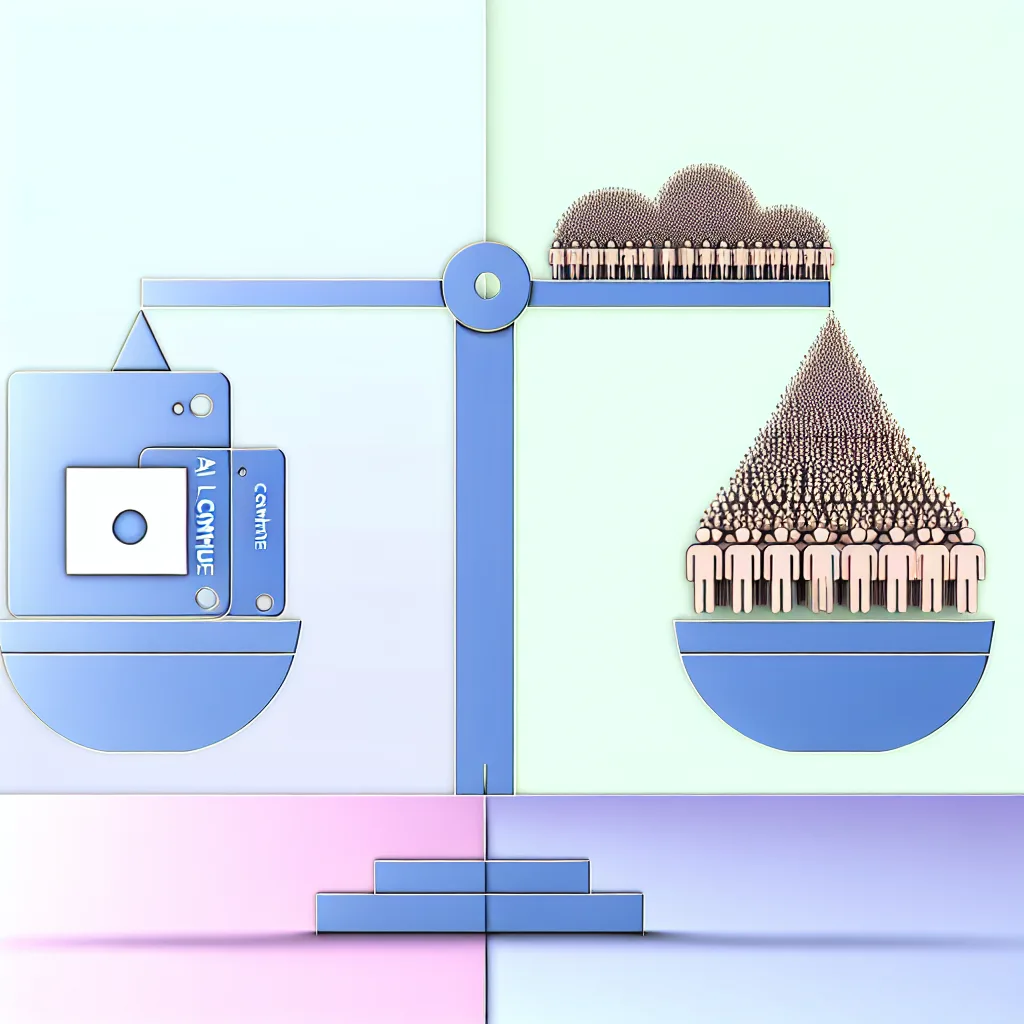

For AI startups and investors, the takeaway is to be realistic. Betting the business on inference costs collapsing is risky. Demand for compute tends to keep pace with efficiency gains, so the likelier outcome is a balancing act between cheaper tokens and heavier workloads rather than a crash in total cost.

Want to dive deeper? Check out resources like OpenAI’s API pricing and NVIDIA’s AI computing platforms.

In the end, cheap AI inference is appealing — but the real story is more nuanced. It’s one to watch carefully if you’re building or backing AI-powered businesses.