Understanding how Lie groups underpin translation invariance in convolutional neural networks

If you’ve ever wondered why convolutional neural networks (CNNs) are so good at recognizing image content even when it shifts position, you’re touching on the idea of Lie group representations. The concept might sound heavy at first, but it goes a long way toward explaining why CNNs work so well with natural signals like images, videos, and audio.

What Are Lie Group Representations?

Lie groups are mathematical objects that describe continuous transformations: rotations, translations, and scalings that vary smoothly with a parameter rather than jumping in steps. For example, imagine turning a photo slightly or sliding it sideways; transformations like these form a Lie group. A Lie group representation describes how signals behave under these transformations: it assigns each transformation a linear map on the space of signals, so that composing two transformations corresponds to composing their maps.
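To make the idea of a representation concrete, here is a minimal sketch using rotations of the plane. It assumes the familiar 2x2 rotation matrix as the linear map attached to each angle; the function name `rotation` and the specific angles are illustrative choices, not something taken from a particular library.

```python
import numpy as np

def rotation(theta: float) -> np.ndarray:
    """2x2 matrix representing a rotation of the plane by theta radians."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s], [s, c]])

a, b = 0.3, 1.1  # two arbitrary angles (illustrative values)

# Defining property of a representation: applying one rotation after
# another gives the same matrix as rotating once by the summed angle.
composed = rotation(a) @ rotation(b)
direct = rotation(a + b)
homomorphism_holds = np.allclose(composed, direct)

# The "do nothing" transformation (angle 0) maps to the identity matrix.
identity_holds = np.allclose(rotation(0.0), np.eye(2))

print(homomorphism_holds, identity_holds)
```

The same pattern applies to signals: replace the 2x2 matrix with the linear operator that rotates or shifts an image, and the composition rule stays the same.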

Now, why does this matter for CNNs? A convolutional layer is translation equivariant: shift the input, and its feature maps shift by the same amount. Combined with pooling, this lets the network recognize a pattern no matter where it appears in an image, a property usually described as (approximate) translation invariance. This behavior isn’t accidental. It comes from building the layers around a group action on the signal (the image), with the group in a standard CNN being the set of translations.

How Lie Group Representations Show Up in CNNs

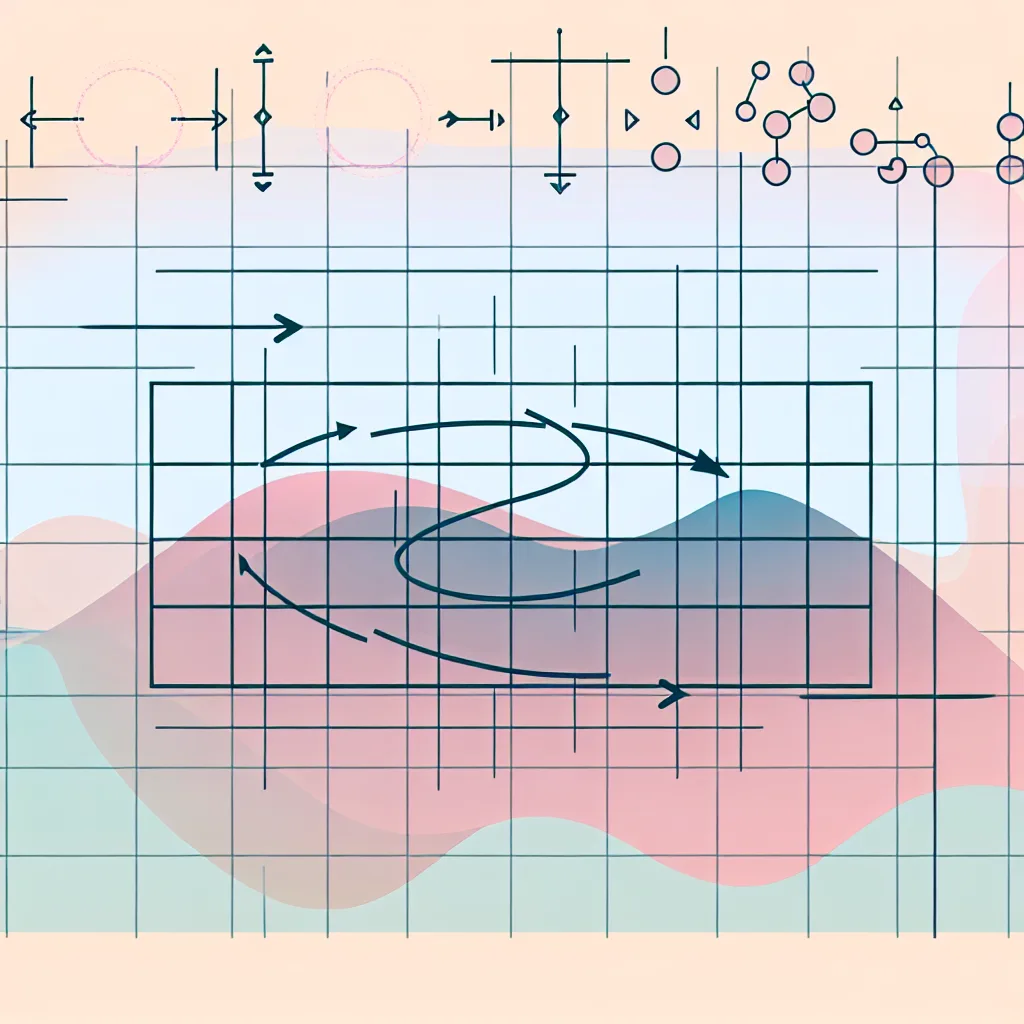

At its core, the convolution operation in CNNs can be thought of as a type of group convolution over the translation group. The same filter slides across the image and responds to a feature wherever it occurs, so shifting the input simply shifts the resulting feature map. This property is called translation equivariance.
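The sliding-filter property can be checked numerically: shifting the input and then filtering should give the same result as filtering and then shifting (often called translation equivariance). The sketch below is a toy 1D version that assumes wrap-around boundaries so the shifts form an exact group; `circular_correlate` is a hypothetical helper written for this illustration, and real CNN layers use padding instead, so the property holds only away from the borders.

```python
import numpy as np

def circular_correlate(signal, kernel):
    """Cross-correlate a 1D signal with a kernel, wrapping at the edges."""
    n = len(signal)
    out = np.zeros(n)
    for i in range(n):
        for j, w in enumerate(kernel):
            out[i] += w * signal[(i + j) % n]
    return out

rng = np.random.default_rng(0)
signal = rng.normal(size=16)   # toy input
kernel = rng.normal(size=3)    # toy filter
shift = 5                      # translation applied to the input

# Path 1: translate the input, then apply the filter.
shift_then_filter = circular_correlate(np.roll(signal, shift), kernel)
# Path 2: apply the filter, then translate the feature map.
filter_then_shift = np.roll(circular_correlate(signal, kernel), shift)

is_equivariant = np.allclose(shift_then_filter, filter_then_shift)
print(is_equivariant)
```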

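Pooling layers then summarize these features into something more invariant by aggregating responses over group elements, which here means nearby positions. Local pooling (such as 2×2 max pooling) gives robustness to small shifts, while pooling over all positions removes the dependence on location entirely. This way, the network builds a stable understanding of the image content irrespective of exact positioning.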
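As a rough illustration of pooling over group elements, the sketch below takes the maximum of a feature map over every position. It assumes the same toy setting of a 1D signal with wrap-around shifts; `circular_correlate` and `global_max_pool` are hypothetical helpers, not functions from a deep learning library.

```python
import numpy as np

def circular_correlate(signal, kernel):
    """Cross-correlate a 1D signal with a kernel, wrapping at the edges."""
    n = len(signal)
    return np.array([
        sum(w * signal[(i + j) % n] for j, w in enumerate(kernel))
        for i in range(n)
    ])

def global_max_pool(features):
    """Pool over every position, i.e. over the whole translation group."""
    return features.max()

rng = np.random.default_rng(0)
signal = rng.normal(size=16)
kernel = rng.normal(size=3)
shifted = np.roll(signal, 5)

features = circular_correlate(signal, kernel)
features_shifted = circular_correlate(shifted, kernel)

# The feature maps differ (one is a shifted copy of the other) ...
maps_differ = not np.allclose(features, features_shifted)
# ... but the pooled summaries agree, so the output ignores position.
is_invariant = np.isclose(global_max_pool(features),
                          global_max_pool(features_shifted))
print(maps_differ, is_invariant)
```

Local pooling windows, as used in most CNNs, only cover a few neighboring positions, so they give invariance to small shifts rather than to all of them.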

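To give some context, this mathematical idea is closely related to well-established tools of harmonic analysis. The Fourier basis on the real line (R) consists of complex exponentials, which are exactly the functions that a translation changes only by a phase factor. Wavelets are built by translating and dilating a single function, giving a basis or frame for square-integrable functions. Group-equivariant CNNs extend these ideas to richer transformations relevant for images, such as those in the special Euclidean group SE(2), which consists of rotations and translations of the plane.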
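The link between Fourier bases and translations can be seen in a few lines. This sketch uses the discrete Fourier transform on a cyclic signal as a stand-in for the real line, which is an assumption made for the sake of a runnable example: a shift multiplies each Fourier coefficient by a phase and leaves its magnitude unchanged.

```python
import numpy as np

rng = np.random.default_rng(0)
n, shift = 16, 5
signal = rng.normal(size=n)

spectrum = np.fft.fft(signal)
shifted_spectrum = np.fft.fft(np.roll(signal, shift))

# Shift theorem: translating by `shift` samples multiplies the k-th
# coefficient by exp(-2*pi*i*k*shift/n). Each frequency transforms on
# its own, without mixing with the others.
k = np.arange(n)
phase = np.exp(-2j * np.pi * k * shift / n)
phase_rule_holds = np.allclose(shifted_spectrum, phase * spectrum)

# Taking magnitudes discards the phase, giving shift-invariant features.
magnitudes_invariant = np.allclose(np.abs(shifted_spectrum),
                                   np.abs(spectrum))
print(phase_rule_holds, magnitudes_invariant)
```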

Why Translation Invariance Is So Important

Natural signals don’t just randomly occur; they tend to live on low-dimensional manifolds that remain stable under transformations like rotations, translations, and scalings. Imagine watching a video where the scene shifts slightly, or listening to audio with small timing differences. CNNs generalize well in part because translation symmetry is built directly into their architecture. Robustness to other transformations, such as rotations and scalings, usually has to be learned from data or built in through group-equivariant layers.

Diving Deeper: The Mathematical Soul of CNNs

This framework, rooted in representation theory and harmonic analysis, helps explain why CNNs capture essential features so robustly. If you want to explore this further, resources like Persi Diaconis’s book “Group Representations in Probability and Statistics” or overview articles on group convolutions in neural networks can be valuable.

For a practical deep dive into group equivariant CNNs, the work by Taco Cohen and Max Welling is a widely cited reference that applies these mathematical concepts to modern neural network design.
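To give a flavor of what rotation equivariance looks like in code, here is a toy sketch that correlates an image with four copies of one filter, rotated by 0, 90, 180, and 270 degrees. It is not the authors’ implementation; `correlate_valid` and `lift_c4` are hypothetical helpers, and the example assumes a square image, a square filter, and no padding so that 90-degree rotations act exactly.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def correlate_valid(image, kernel):
    """2D cross-correlation without padding ("valid" mode)."""
    windows = sliding_window_view(image, kernel.shape)
    return np.einsum("ijab,ab->ij", windows, kernel)

def lift_c4(image, kernel):
    """One feature map per 90-degree rotation of the same kernel."""
    return [correlate_valid(image, np.rot90(kernel, r)) for r in range(4)]

rng = np.random.default_rng(0)
image = rng.normal(size=(8, 8))
kernel = rng.normal(size=(3, 3))

features = lift_c4(image, kernel)
features_rot = lift_c4(np.rot90(image), kernel)

# Rotating the input rotates each feature map and cyclically shifts
# which rotated filter produced it: map r of the rotated input equals
# the rotated map r-1 of the original input.
is_equivariant = all(
    np.allclose(features_rot[r], np.rot90(features[(r - 1) % 4]))
    for r in range(4)
)
print(is_equivariant)
```

Taking a maximum over the four maps and over all positions would then give a summary that does not change under 90-degree rotations, mirroring how pooling turns translation equivariance into invariance.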

By viewing CNNs through the lens of Lie group representations, it’s easier to appreciate the elegant math that empowers your favorite computer vision models. So next time your phone recognizes a face no matter where it sits in the frame, you might think about the beautiful math quietly at work behind the scenes.

Further Reading & Resources

- Understanding Convolutional Neural Networks (CS231n, Stanford)

- Group Equivariant Convolutional Networks (Taco Cohen & Max Welling’s paper)

- Lie Groups and Lie Algebras – Wikipedia

Dive in, and you just might find a new appreciation for the math behind everyday AI!